Temporal Attentive Moment Alignment Network (TAMAN)

A Novel Method and A New Benchmark Dataset for Multi-Source Video Domain Adaptation (MSVDA)


Abstract

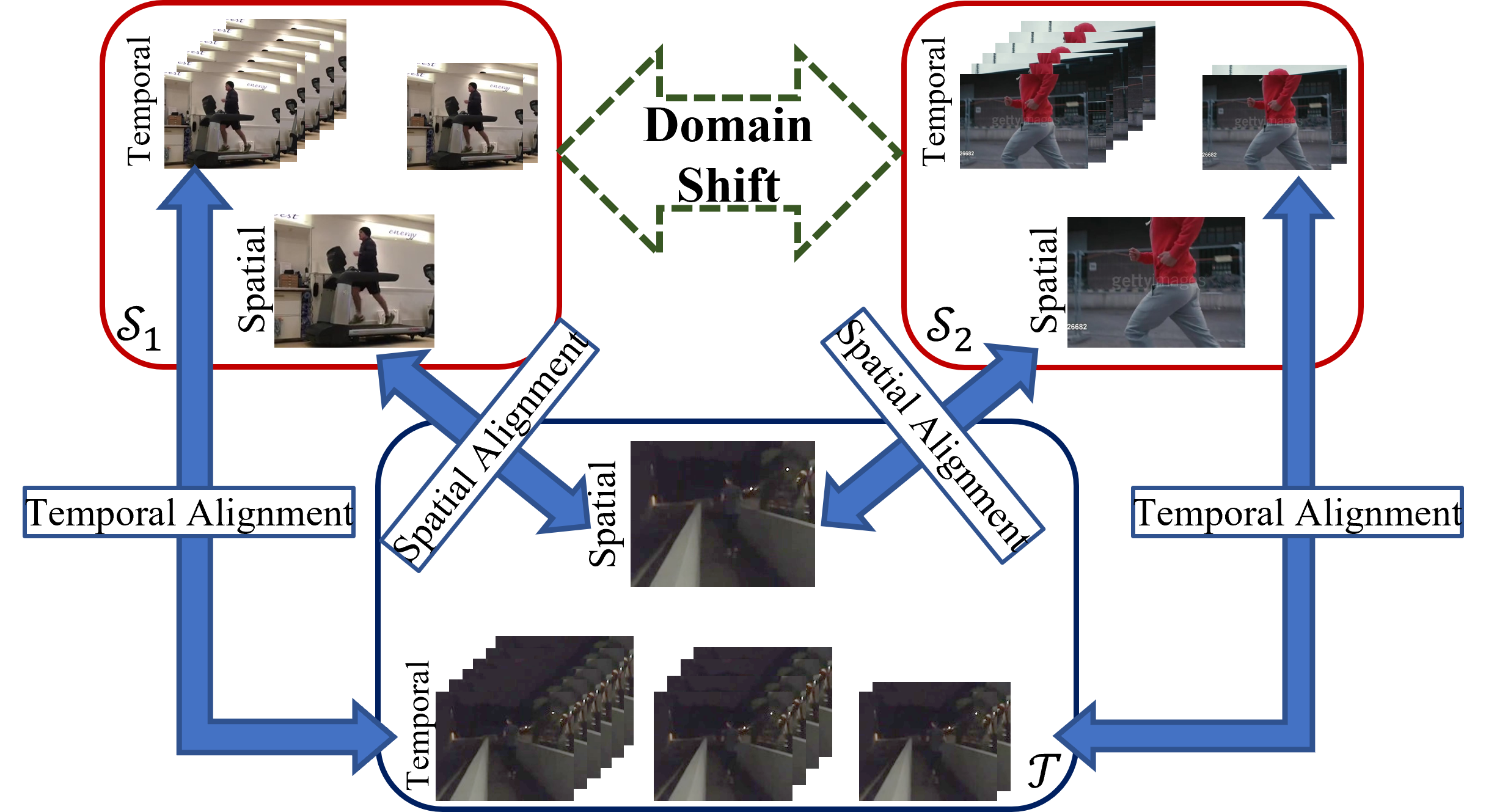

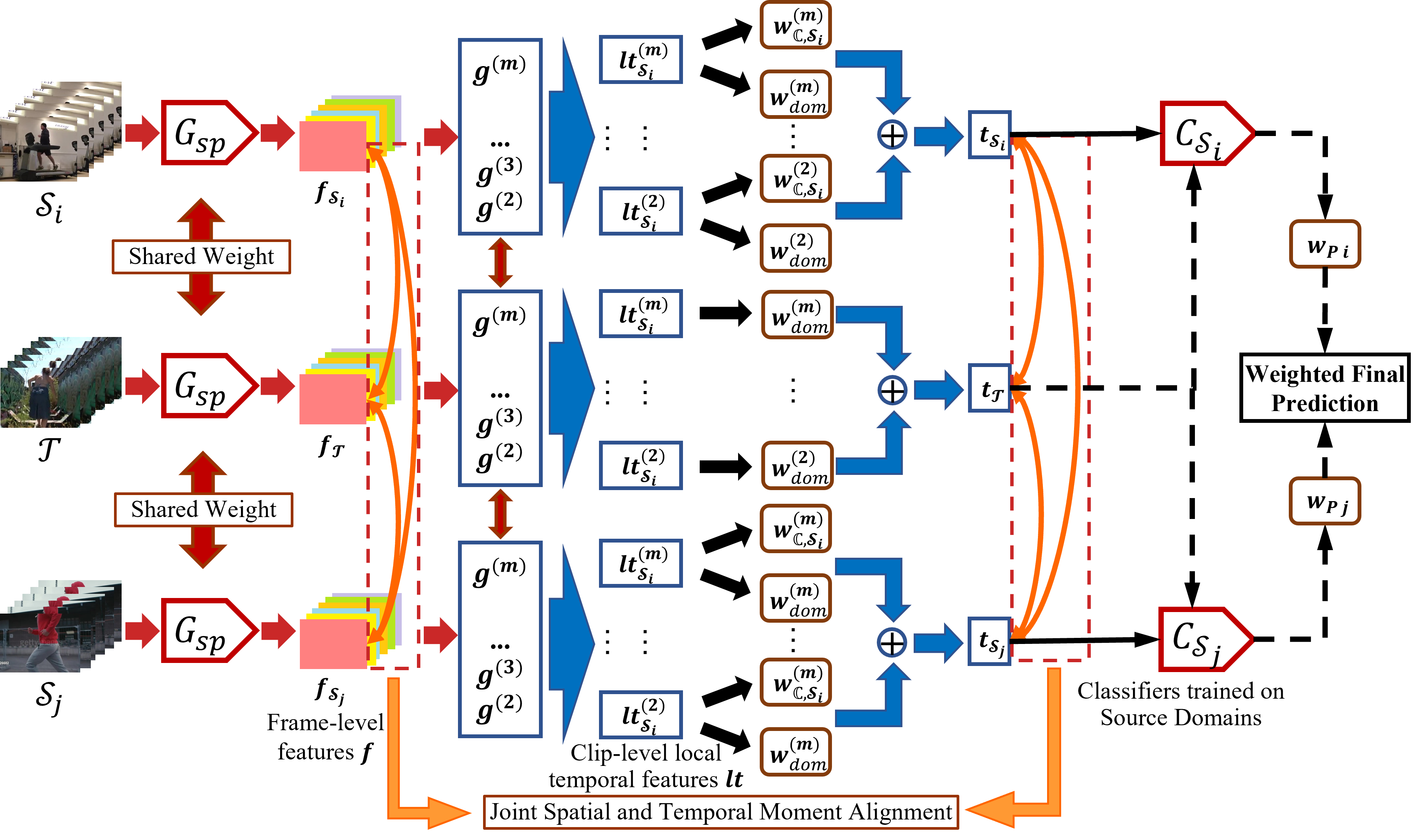

Multi-Source Domain Adaptation (MSDA) is a more practical domain adaptation scenario for real-world applications. It relaxes the assumption in conventional Unsupervised Domain Adaptation (UDA) that source data are sampled from a single domain and match a uniform data distribution. MSDA is more difficult because different domain shifts exist between distinct domain pairs. For videos, negative transfer can additionally be provoked by spatial-temporal features, which leads to the more challenging Multi-Source Video Domain Adaptation (MSVDA) problem. In this paper, we address the MSVDA problem by proposing a novel Temporal Attentive Moment Alignment Network (TAMAN), which aims for effective feature transfer by dynamically aligning both spatial and temporal feature moments. TAMAN further constructs robust global temporal features by attending to dominant domain-invariant local temporal features with high local classification confidence and low disparity between global and local feature discrepancies. To facilitate future research on the MSVDA problem, we introduce comprehensive benchmarks covering extensive MSVDA scenarios. Empirical results demonstrate the superior performance of the proposed TAMAN across multiple MSVDA benchmarks.

Structure of TAMAN

The structure of TAMAN is as follows:

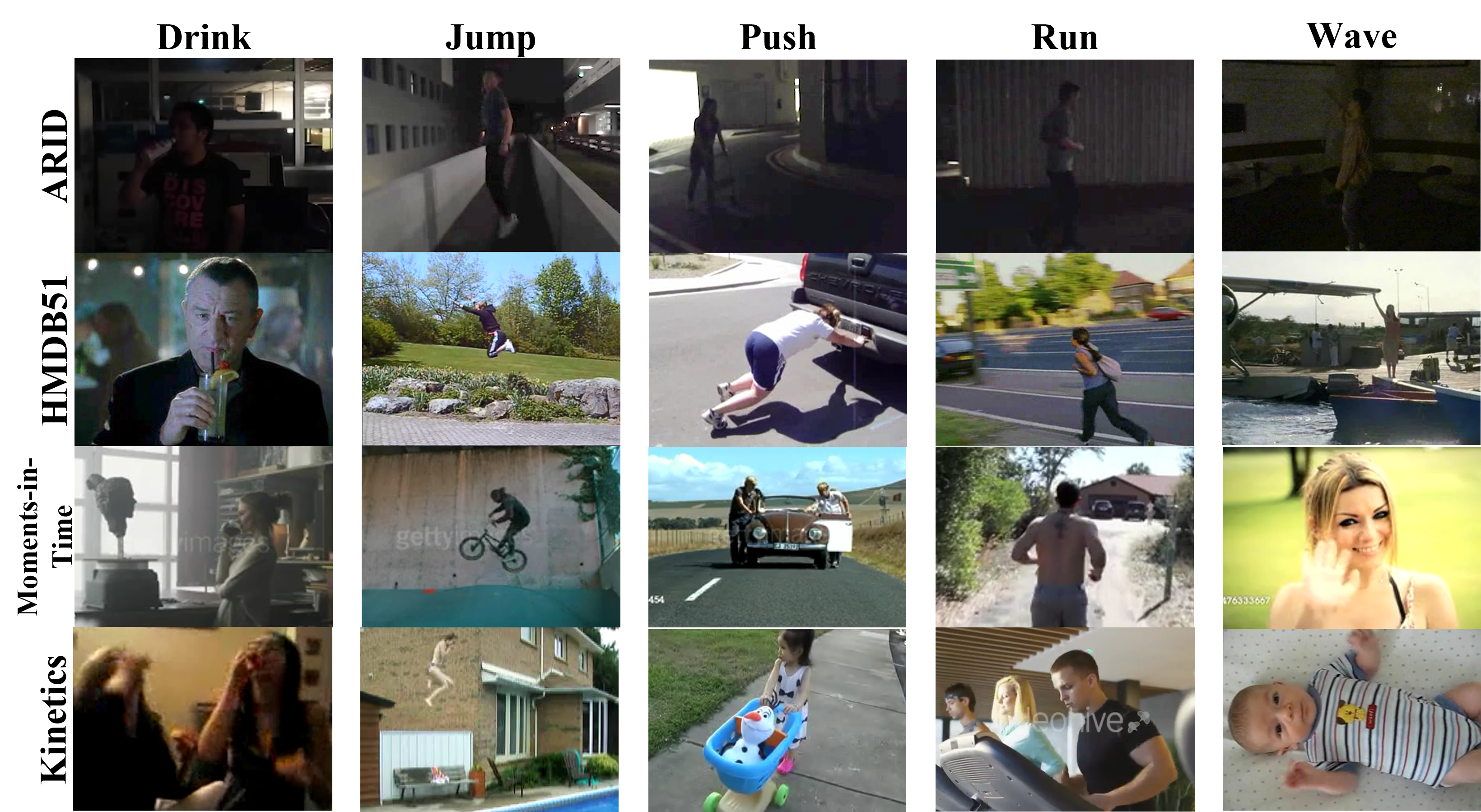

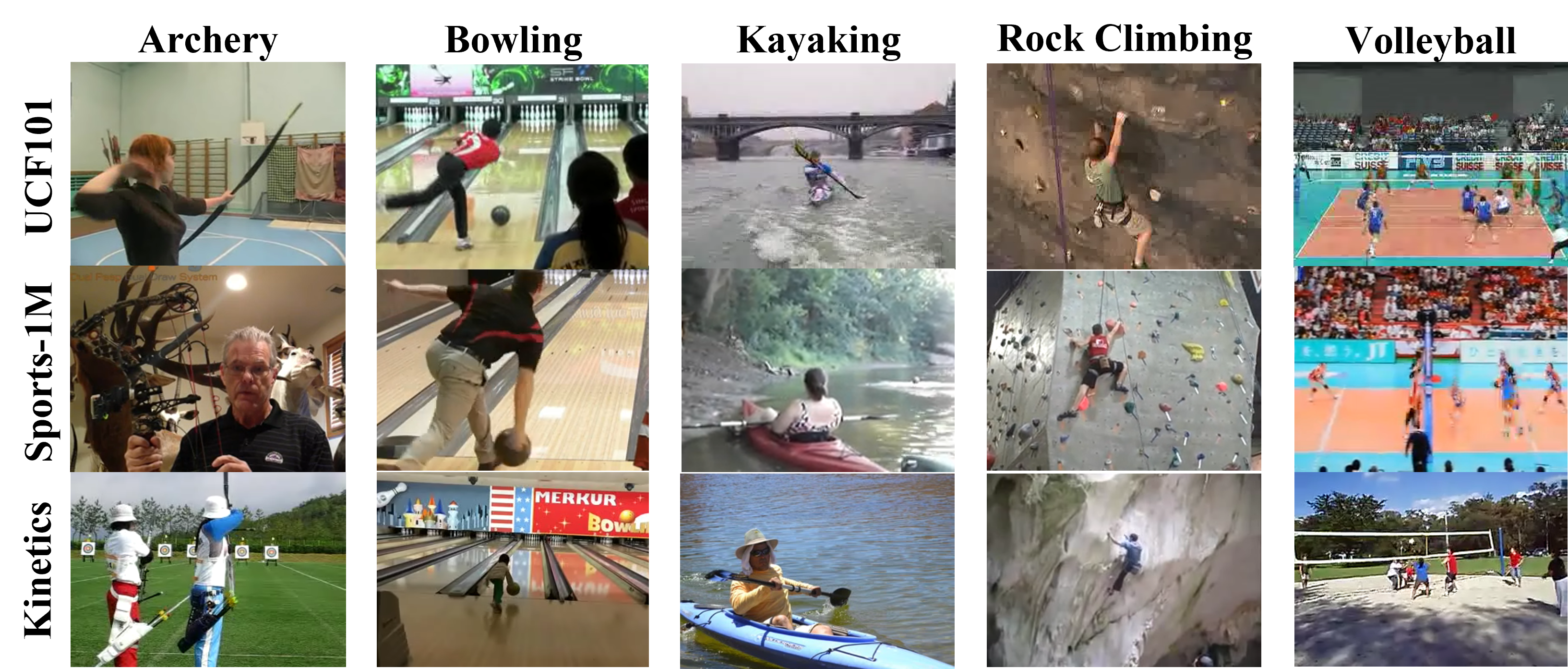

The Daily-DA and Sports-DA datasets

There are very few cross-domain benchmark datasets for Video-based Unsupervised Domain Adaptation (VUDA) and its variant tasks. For the few cross-domain datasets available, such as UCF-HMDBfull for standard VUDA and HMDB-ARIDpartial for Partial Video Domain Adaptation (PVDA), the source is always constrained to a single domain. To facilitate MSVDA research, we propose two sets of comprehensive benchmarks, namely the Daily-DA and the Sports-DA datasets. Both datasets cover extensive MSVDA scenarios and provide adequate baselines with distinct domain shifts. These datasets can also be used as comprehensive sets of cross-domain datasets for VUDA.

- Daily-DA: The Daily-DA dataset comprises videos of common daily actions, such as drinking and walking. It is constructed from four action datasets: ARID (A), HMDB51 (H), Moments-in-Time (M), and Kinetics (K). Among these, HMDB51, Moments-in-Time, and Kinetics are widely used action recognition benchmarks collected from various public video platforms. ARID is a more recent dark dataset, comprising videos shot under adverse illumination conditions. ARID is characterized by its low RGB mean value and standard deviation (std), which results in a larger domain gap between ARID and the other video domains. A total of 8 overlapping classes are collected, resulting in a total of 18,949 videos. When performing MSVDA, one dataset is selected as the target domain, with the remaining three datasets as the source domains, resulting in four MSVDA tasks: Daily->A, Daily->H, Daily->M, and Daily->K. The training and testing splits follow the official splits of each dataset.

- Sports-DA: The Sports-DA dataset contains videos of various sport actions, such as bike riding and rope climbing. It is built from three large-scale action datasets: UCF101 (U), Sports-1M (S), and Kinetics (K). Compared to Daily-DA, this dataset is much larger in terms of the number of classes and videos. A total of 23 overlapping classes are collected, resulting in a total of 40,718 videos, making the Sports-DA dataset one of the largest cross-domain video datasets introduced. Its objective is to validate the effectiveness of MSVDA approaches on large-scale video data. Similar to the Daily-DA dataset, one dataset is selected as the target domain, with the remaining two datasets as the source domains when performing MSVDA, resulting in three MSVDA tasks: Sports->U, Sports->S, and Sports->K. The training and testing splits follow the official splits of each dataset.
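The leave-one-out task construction described for both benchmarks can be sketched in a few lines of Python. This is only an illustration of the naming scheme: the function `msvda_tasks` and the dictionary layout are hypothetical and are not part of the released data or code; only the domain abbreviations and task names come from the descriptions above.

```python
# Domain abbreviations as given in the dataset descriptions.
DAILY_DA = {"A": "ARID", "H": "HMDB51", "M": "Moments-in-Time", "K": "Kinetics"}
SPORTS_DA = {"U": "UCF101", "S": "Sports-1M", "K": "Kinetics"}


def msvda_tasks(benchmark, domains):
    """Hypothetical helper: build one task per domain, where that domain
    is the target and all remaining domains are the sources."""
    tasks = []
    for target in domains:
        sources = [d for d in domains if d != target]
        tasks.append(
            {"name": f"{benchmark}->{target}", "target": target, "sources": sources}
        )
    return tasks


daily_tasks = msvda_tasks("Daily", DAILY_DA)
sports_tasks = msvda_tasks("Sports", SPORTS_DA)

for task in daily_tasks + sports_tasks:
    print(task["name"], "sources:", task["sources"])
```

Running the sketch lists the four Daily-DA tasks followed by the three Sports-DA tasks, each with its source domains.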

Sampled frames from Daily-DA and Sports-DA:

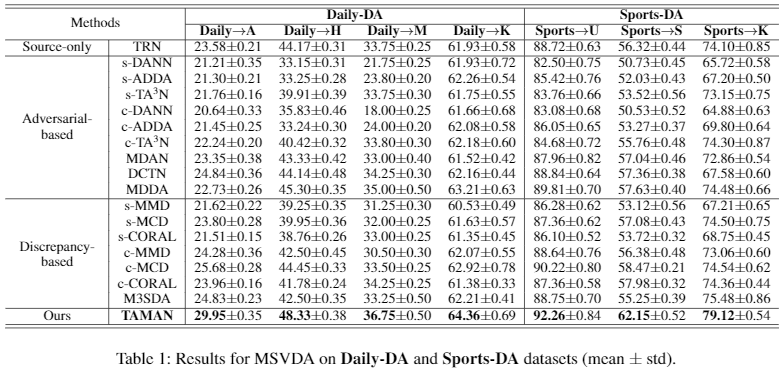

Benchmark Results

We tested our proposed TAMAN on both Daily-DA and Sports-DA, while comparing with previous domain adaptation methods. The results are as follows:

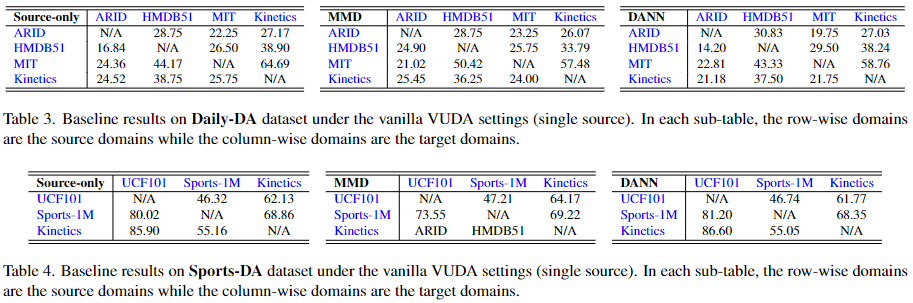

Both Daily-DA and Sports-DA can also be used for closed-set Video-based Unsupervised Domain Adaptation (VUDA). The results using TRN as the base network are as follows:

Data Download

- To learn more about our TAMAN network and the Daily-DA and Sports-DA datasets, please click Here for our paper. Our paper has been accepted by IEEE Transactions on Circuits and Systems for Video Technology (TCSVT); the IEEE version can be found Here.

- To obtain our Daily-DA dataset, you may need the full ARID (v1.5), HMDB51, Moments-in-Time, and Kinetics-600 datasets. You may find the HMDB51 dataset Here, the ARID dataset (v1.5) Here, the Moments-in-Time dataset Here, and Kinetics-600 Here. Alternatively, you may download a full copy of HMDB51 from Google Drive, and Kinetics-600 from AmazonAWS.

- The train/test split lists for Daily-DA can be downloaded Here. Note that each line of a list file contains the VideoID, ClassID, and VideoFile.

- To obtain our Sports-DA dataset, you may need the full UCF101, Sports-1M, and Kinetics-600 datasets. You may find the UCF101 dataset Here, the Sports-1M dataset Here, and Kinetics-600 Here. Alternatively, you may download a full copy of UCF101 from Google Drive, and Kinetics-600 from AmazonAWS or from OneDrive. A local version of Sports-1M can be found Here.

- The train/test split lists for Sports-DA can be downloaded Here. Note that each line of a list file contains the VideoID, ClassID, and VideoFile.

- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg, Jianfei Yang at yang0478@e.ntu.edu.sg and Zhenghua Chen at chen0832@e.ntu.edu.sg. Thank you!

- This work is licensed under a Creative Commons Attribution 4.0 International License.

- You may view the license here

How to use the train/test list

Take the Daily->A setting in Daily-DA as an example: the train lists should be “hmdb51_msda_train.txt”, “kinetics_daily_msda_train.txt”, “mit_msda_train.txt” and “arid_msda_train.txt” in the “Daily-DA” folder (decompressed), where “arid_msda_train.txt” is used in an unsupervised fashion. That is, its labels should not be loaded during training. For testing, the test list is simply “arid_msda_test.txt”.
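A minimal sketch of how the lists for the Daily->A setting might be loaded is given below. It assumes that the three fields on each line (VideoID, ClassID, VideoFile) are separated by whitespace and that ClassID is an integer; neither is confirmed here, so please check the downloaded files first. The names `VideoEntry`, `parse_list_line`, `load_list` and `load_daily_to_arid` are hypothetical helpers, not part of any released code.

```python
from pathlib import Path
from typing import Dict, List, NamedTuple, Optional, Tuple


class VideoEntry(NamedTuple):
    video_id: str
    class_id: Optional[int]  # None when the label is deliberately not loaded
    video_file: str


def parse_list_line(line: str, keep_label: bool = True) -> VideoEntry:
    # Assumption: fields are whitespace-separated, in the order
    # VideoID, ClassID, VideoFile. maxsplit=2 keeps any spaces in VideoFile.
    video_id, class_id, video_file = line.split(maxsplit=2)
    label = int(class_id) if keep_label else None
    return VideoEntry(video_id, label, video_file.strip())


def load_list(path, keep_label: bool = True) -> List[VideoEntry]:
    entries = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():  # skip blank lines
                entries.append(parse_list_line(line, keep_label))
    return entries


def load_daily_to_arid(
    list_dir,
) -> Tuple[Dict[str, List[VideoEntry]], List[VideoEntry], List[VideoEntry]]:
    list_dir = Path(list_dir)
    source_files = [
        "hmdb51_msda_train.txt",
        "kinetics_daily_msda_train.txt",
        "mit_msda_train.txt",
    ]
    # Labeled source-domain training lists.
    sources = {name: load_list(list_dir / name) for name in source_files}
    # Target training list: labels are dropped (unsupervised use).
    target_train = load_list(list_dir / "arid_msda_train.txt", keep_label=False)
    # Target test list: labels are kept for evaluation.
    target_test = load_list(list_dir / "arid_msda_test.txt")
    return sources, target_train, target_test


# Example call, assuming the decompressed folder is named "Daily-DA":
# sources, target_train, target_test = load_daily_to_arid("Daily-DA")
```

Dropping the label at load time, rather than ignoring it later in the training loop, makes it harder to use target labels during training by accident.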

Citations

- If you find our work helpful, you may cite these works below: (updated)

@article{xu2023multi,
  title={Multi-source video domain adaptation with temporal attentive moment alignment network},
  author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Wu, Keyu and Wu, Min and Li, Zhengguo and Chen, Zhenghua},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  year={2023},
  publisher={IEEE}
}

@inproceedings{xu2021arid,
  title={Arid: A new dataset for recognizing action in the dark},
  author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Mao, Kezhi and Yin, Jianxiong and See, Simon},
  booktitle={Deep Learning for Human Activity Recognition: Second International Workshop, DL-HAR 2020, Held in Conjunction with IJCAI-PRICAI 2020, Kyoto, Japan, January 8, 2021, Proceedings 2},
  pages={70--84},
  year={2021},
  organization={Springer}
}

- This work also relies on previous pioneer datasets in action recognition. You may consider citing their works:

@inproceedings{kuehne2011hmdb,
  title={HMDB: a large video database for human motion recognition},
  author={Kuehne, Hildegard and Jhuang, Hueihan and Garrote, Est{\'\i}baliz and Poggio, Tomaso and Serre, Thomas},
  booktitle={2011 International conference on computer vision},
  pages={2556--2563},
  year={2011},
  organization={IEEE}
}

@article{soomro2012ucf101,
  title={UCF101: A dataset of 101 human actions classes from videos in the wild},
  author={Soomro, Khurram and Zamir, Amir Roshan and Shah, Mubarak},
  journal={arXiv preprint arXiv:1212.0402},
  year={2012}
}

@article{kay2017kinetics,
  title={The kinetics human action video dataset},
  author={Kay, Will and Carreira, Joao and Simonyan, Karen and Zhang, Brian and Hillier, Chloe and Vijayanarasimhan, Sudheendra and Viola, Fabio and Green, Tim and Back, Trevor and Natsev, Paul and others},
  journal={arXiv preprint arXiv:1705.06950},
  year={2017}
}

@inproceedings{karpathy2014large,
  title={Large-scale video classification with convolutional neural networks},
  author={Karpathy, Andrej and Toderici, George and Shetty, Sanketh and Leung, Thomas and Sukthankar, Rahul and Fei-Fei, Li},
  booktitle={Proceedings of the IEEE conference on Computer Vision and Pattern Recognition},
  pages={1725--1732},
  year={2014}
}

@article{monfort2019moments,
  title={Moments in time dataset: one million videos for event understanding},
  author={Monfort, Mathew and Andonian, Alex and Zhou, Bolei and Ramakrishnan, Kandan and Bargal, Sarah Adel and Yan, Tom and Brown, Lisa and Fan, Quanfu and Gutfreund, Dan and Vondrick, Carl and others},
  journal={IEEE transactions on pattern analysis and machine intelligence},
  volume={42},
  number={2},
  pages={502--508},
  year={2019},
  publisher={IEEE}
}