Partial Adversarial Temporal Attentive Network (PATAN)

A Novel Method and A New Benchmark Dataset for Partial Video-based Domain Adaptation (PVDA)

Abstract

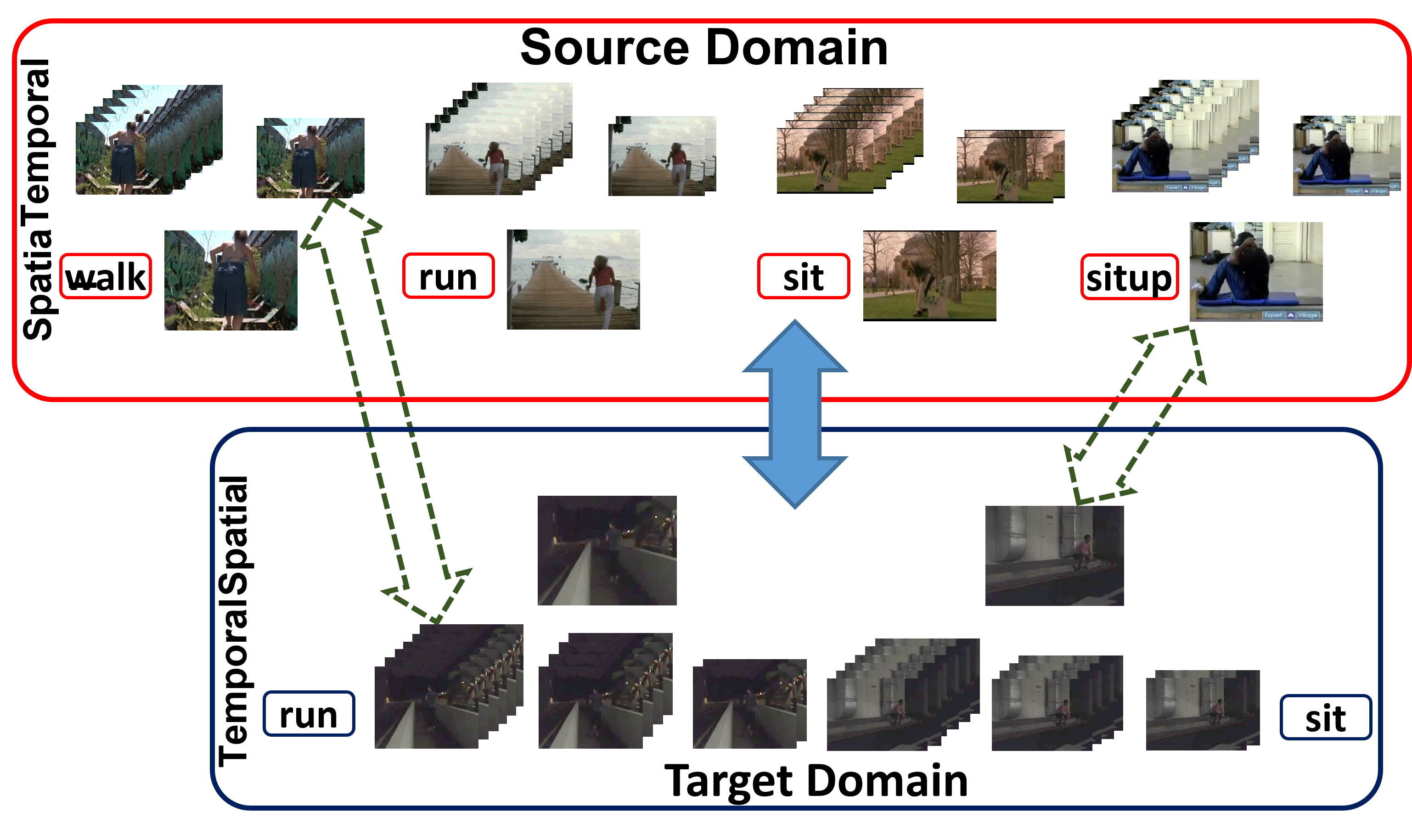

Partial Domain Adaptation (PDA) is a practical and general domain adaptation scenario, which relaxes the fully shared label space assumption such that the source label space subsumes the target one. The key challenge of PDA is the issue of negative transfer caused by source-only classes. For videos, such negative transfer could be triggered by both spatial and temporal features, which leads to a more challenging Partial Video Domain Adaptation (PVDA) problem. In this paper, we propose a novel Partial Adversarial Temporal Attentive Network (PATAN) to address the PVDA problem by utilizing both spatial and temporal features for filtering source-only classes. Besides, PATAN constructs effective overall temporal features by attending to local temporal features that contribute more toward the class filtration process. We further introduce new benchmarks to facilitate research on PVDA problems, covering a wide range of PVDA scenarios. Empirical results demonstrate the state-of-the-art performance of our proposed PATAN across the multiple PVDA benchmarks.

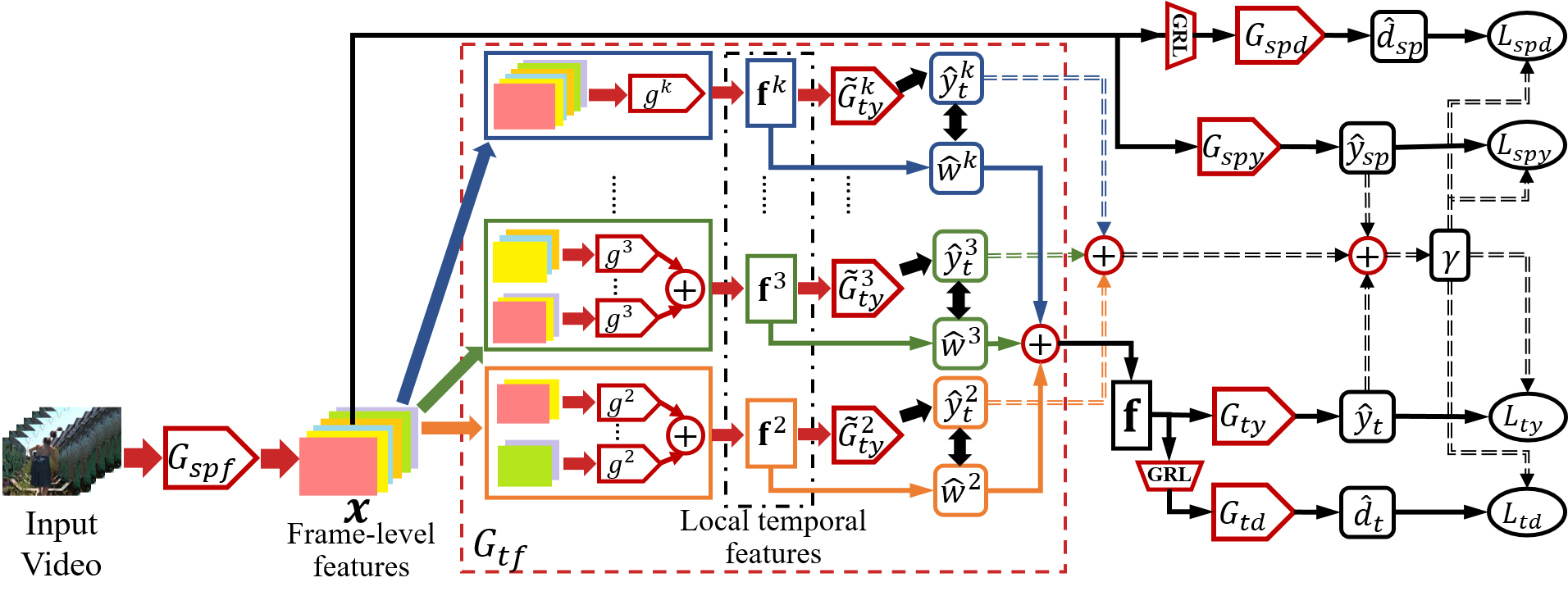

Structure of PATAN

The structure of PATAN is as follows:

Benchmark Datasets for PVDA

There are very few cross-domain benchmark datasets for video-based unsupervised domain adaptation (VUDA). Existing cross-domain VUDA datasets are designed for standard VUDA tasks, in which the source label space is constrained to be the same as the target label space. To facilitate PVDA research, we propose three sets of benchmarks, UCF-HMDBpartial, MiniKinetics-UCF, and HMDB-ARIDpartial, which cover a wide range of PVDA scenarios and provide adequate baseline environments with distinct domain shifts.

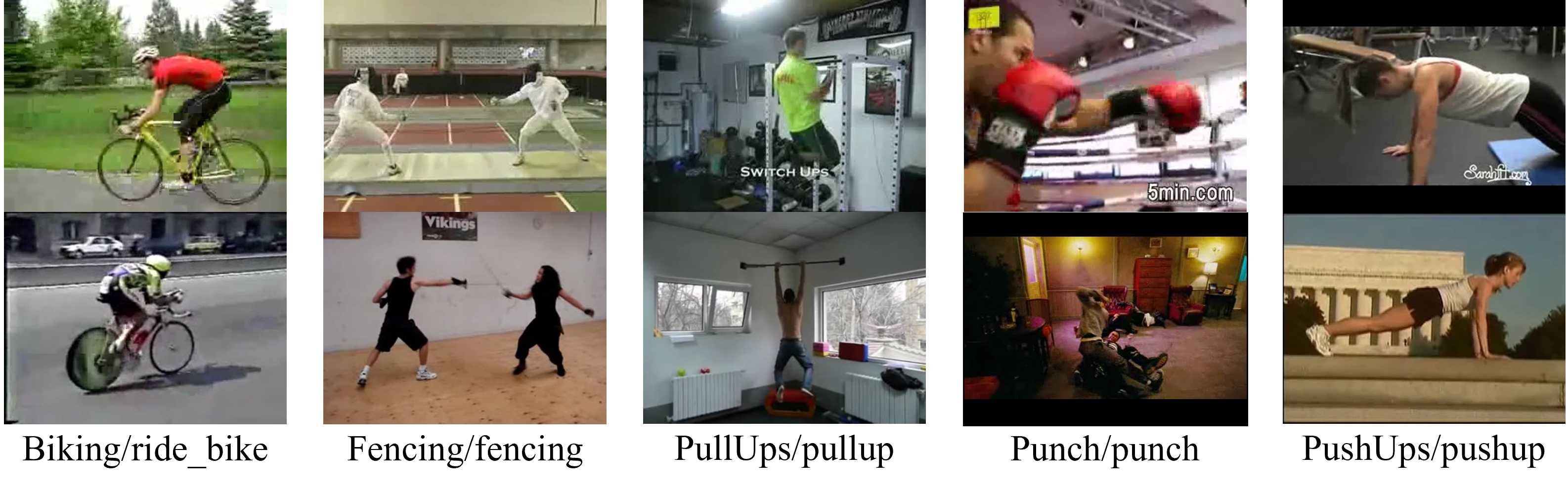

- UCF-HMDBpartial: UCF-HMDBpartial is constructed from two widely used video datasets, UCF101 (U) and HMDB51 (H). The overlapping classes between the two datasets are collected, resulting in 14 classes with 2,780 videos. The first 7 categories in alphabetical order of the target domain are chosen as target categories, and we construct two PVDA tasks: U-14->H-7 and H-14->U-7. We follow the official split for the training and validation sets.

Sampled frames from UCF-HMDBpartial:

- MiniKinetics-UCF: MiniKinetics-UCF is built from two large-scale video datasets, MiniKinetics-200 (M) and UCF101 (U), which share 45 overlapping classes. As in the construction of UCF-HMDBpartial, the first 18 categories in alphabetical order of the target domain are chosen as target categories, resulting in two PVDA tasks: M-45->U-18 and U-45->M-18. This dataset contains a total of 22,102 videos, nearly 8 times as many as UCF-HMDBpartial, so it can be used to validate the effectiveness of PVDA approaches on a large-scale dataset.

Sampled frames from MiniKinetics-UCF:

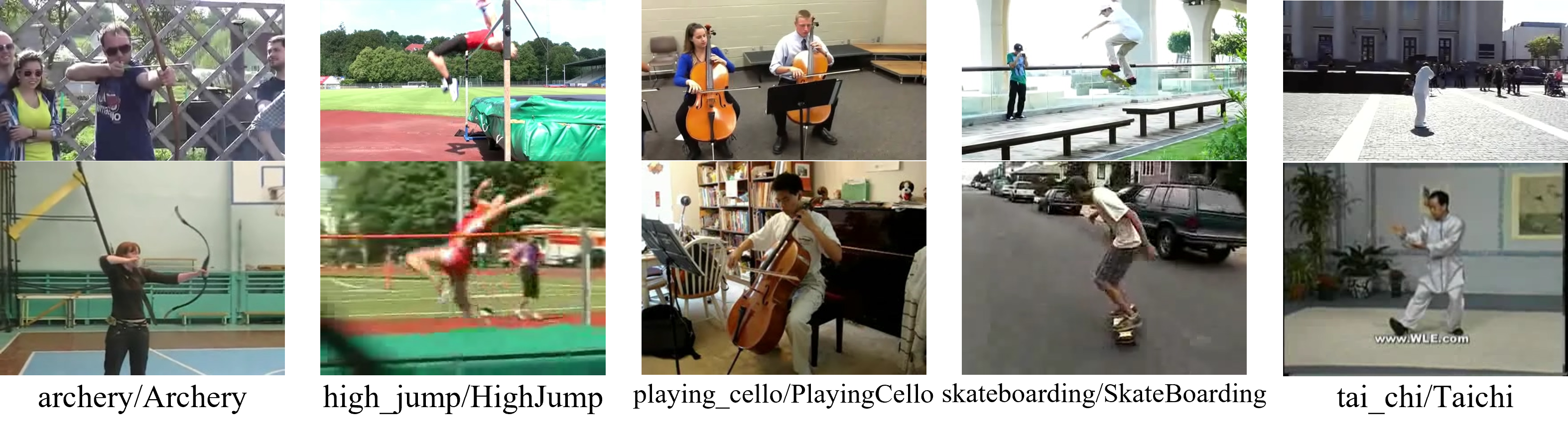

- HMDB-ARIDpartial: HMDB-ARIDpartial is constructed with the goal of leveraging current video datasets to boost performance on videos shot in adverse environments. It incorporates both HMDB51 (H) and a more recent dark dataset, ARID (A), whose videos are shot under adverse illumination conditions. Statistically, videos in ARID have a much lower RGB mean value and standard deviation (std), which leads to a larger domain shift between ARID and HMDB51 than in other cross-domain datasets. The overlapping classes between the two datasets are collected, resulting in 10 classes with 3,252 videos. The first 5 categories in alphabetical order of the target domain are chosen as target categories, resulting in two PVDA tasks: H-10->A-5 and A-10->H-5. For all the aforementioned benchmarks, the training and validation sets are separated following the official split methods.

Sampled frames from HMDB-ARIDpartial:

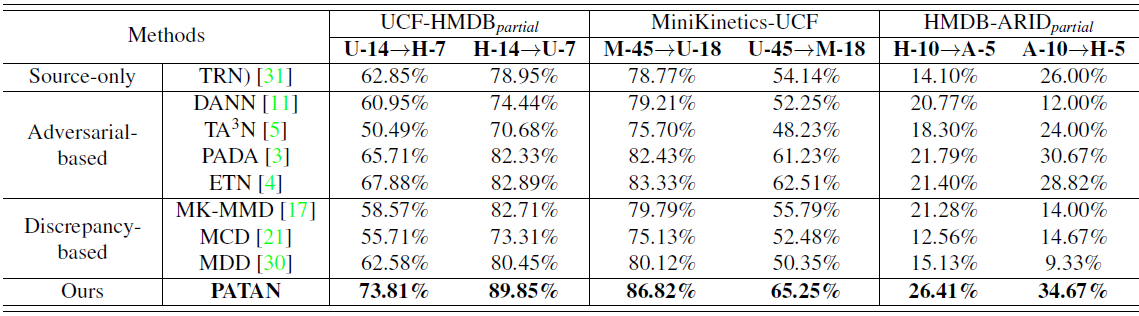
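The construction rule shared by the three benchmarks above (collect the overlapping classes, then take the first k categories in alphabetical order of the target domain as target categories) can be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `select_target_classes`, the pair-based representation of overlapping classes, the case-insensitive ordering, and the toy class names are all hypothetical and are not taken from the released split files.

```python
from typing import List, Tuple


def select_target_classes(
    overlap: List[Tuple[str, str]], num_target: int
) -> List[Tuple[str, str]]:
    """Pick the target label space for one PVDA task.

    `overlap` holds (source_name, target_name) pairs, one per class shared
    by the two datasets. The source domain keeps every pair; the target
    domain keeps only the first `num_target` pairs when ordered by the
    target-domain class name.
    """
    if not 0 < num_target <= len(overlap):
        raise ValueError("num_target must be between 1 and len(overlap)")
    # Assumption: alphabetical order is taken case-insensitively.
    ordered = sorted(overlap, key=lambda pair: pair[1].lower())
    return ordered[:num_target]


# Toy overlap with made-up class names (not the real benchmark classes).
toy_overlap = [("Run", "run"), ("Climb", "climb"), ("Jump", "jump"), ("Walk", "walk")]
toy_targets = select_target_classes(toy_overlap, 2)
print(toy_targets)
```

With the real class lists, a call such as `select_target_classes(overlap, 7)` on the 14 overlapping UCF101/HMDB51 pairs would correspond to the U-14->H-7 setting, provided the ordering assumption above matches the released class lists.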

Benchmark Results

We tested our proposed PATAN on the three benchmark datasets introduced above and compared it with previous (partial) domain adaptation methods. The results are as follows:

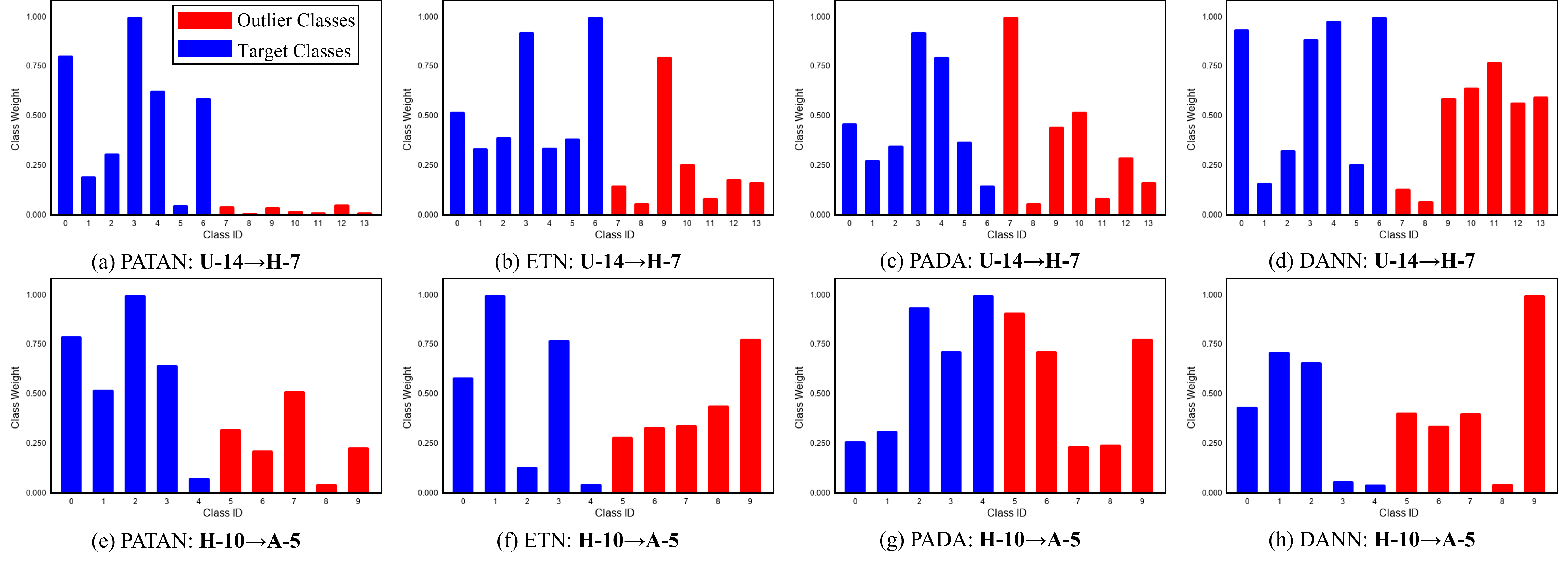

We further compared the learnt class weights on two settings, U-14->H-7 and H-10->A-5, with the results shown as follows:

Papers, Notes and Download

- To learn more about our PATAN network and HMDB-ARID dataset, please click Here for our paper!

- Official demo code can be found in this GitHub repository; you may refer to it for training and testing.

- This paper was accepted as an oral presentation at ICCV 2021!

- To obtain our HMDB-ARIDpartial dataset, you may need the full HMDB51 and ARID datasets. You may find the HMDB51 dataset Here and the ARID dataset Here or the compact version Here. Alternatively, you may download a full copy of HMDB51.

- To obtain our UCF-HMDBpartial dataset, you may need the full UCF101 and HMDB51 datasets. You may find the UCF101 dataset Here. Alternatively, you may download a full copy of UCF101 from Google Drive.

- To obtain our MiniKinetics-UCF dataset, you may need the full MiniKinetics and UCF101 datasets. You may find the MiniKinetics dataset Here. Alternatively, you may download a full copy of MiniKinetics from OneDrive.

- The list of train/test splits for the three benchmark datasets can be downloaded from the following links: UCF-HMDBpartial, MiniKinetics-UCF, HMDB-ARIDpartial.

- Note that each line of the list files contains the VideoID, ClassID, and VideoFile. Take the U-14->H-7 setting as an example (source UCF101 with 14 classes, target HMDB51 with 7 classes): during training, “ucf101_train_uh.txt” and “hmdb51_train_uh.txt” are the train lists and “hmdb51_test_uh.txt” is the test list. The labels in “hmdb51_train_uh.txt” are ignored, following the unsupervised domain adaptation setting. The other dataset settings follow the same convention. The classes and their respective IDs for the U-14->H-7 setting can be found in “class_list_ucf101_uh.csv” and “class_list_hmdb51_uh.csv”.

- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg, Jianfei Yang at yang0478@e.ntu.edu.sg or Haozhi Cao at haozhi002@e.ntu.edu.sg. Thank you!

- Usage of our dataset is licensed under the MIT License; you may view the license here.

- If you find our work helpful, you may cite these works below:

@inproceedings{xu2021partial,
  title={Partial video domain adaptation with partial adversarial temporal attentive network},
  author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Chen, Zhenghua and Li, Qi and Mao, Kezhi},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={9332--9341},
  year={2021}
}

@inproceedings{xu2021arid,
  title={Arid: A new dataset for recognizing action in the dark},
  author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Mao, Kezhi and Yin, Jianxiong and See, Simon},
  booktitle={Deep Learning for Human Activity Recognition: Second International Workshop, DL-HAR 2020, Held in Conjunction with IJCAI-PRICAI 2020, Kyoto, Japan, January 8, 2021, Proceedings 2},
  pages={70--84},
  year={2021},
  organization={Springer}
}

- This work is licensed under a Creative Commons Attribution 4.0 International License.

- This work is inspired by previous work in Video-based Domain Adaptation. You may also consider citing their work:

@inproceedings{chen2019temporal,
  title={Temporal attentive alignment for large-scale video domain adaptation},
  author={Chen, Min-Hung and Kira, Zsolt and AlRegib, Ghassan and Yoo, Jaekwon and Chen, Ruxin and Zheng, Jian},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={6321--6330},
  year={2019}
}

- This work also relies on previous pioneering datasets in action recognition. You may consider citing their works:

@inproceedings{kuehne2011hmdb,
  title={HMDB: a large video database for human motion recognition},
  author={Kuehne, Hildegard and Jhuang, Hueihan and Garrote, Est{\'\i}baliz and Poggio, Tomaso and Serre, Thomas},
  booktitle={2011 International conference on computer vision},
  pages={2556--2563},
  year={2011},
  organization={IEEE}
}

@article{soomro2012ucf101,
  title={UCF101: A dataset of 101 human actions classes from videos in the wild},
  author={Soomro, Khurram and Zamir, Amir Roshan and Shah, Mubarak},
  journal={arXiv preprint arXiv:1212.0402},
  year={2012}
}

@inproceedings{xie2018rethinking,
  title={Rethinking spatiotemporal feature learning: Speed-accuracy trade-offs in video classification},
  author={Xie, Saining and Sun, Chen and Huang, Jonathan and Tu, Zhuowen and Murphy, Kevin},
  booktitle={Proceedings of the European conference on computer vision (ECCV)},
  pages={305--321},
  year={2018}
}
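For the train/test list files described above (each line containing the VideoID, ClassID, and VideoFile), a minimal reading sketch might look like the following. It assumes the three fields are separated by whitespace, which is not stated on this page, so the split may need adjusting for the actual files. The names `VideoRecord` and `parse_list_lines` are hypothetical, and the sample lines are made up for illustration.

```python
from typing import Iterable, List, NamedTuple, Optional


class VideoRecord(NamedTuple):
    video_id: str
    class_id: Optional[int]  # None when labels are deliberately dropped
    video_file: str


def parse_list_lines(lines: Iterable[str], keep_labels: bool = True) -> List[VideoRecord]:
    """Parse 'VideoID ClassID VideoFile' lines into records.

    Assumption: fields are whitespace-separated. Set keep_labels=False for
    the target-domain train list, whose labels are ignored in the
    unsupervised domain adaptation setting.
    """
    records = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # maxsplit=2 keeps any spaces inside the file name intact.
        video_id, class_id, video_file = line.split(maxsplit=2)
        label = int(class_id) if keep_labels else None
        records.append(VideoRecord(video_id, label, video_file))
    return records


# Made-up sample lines; the real files may use different IDs and paths.
sample_lines = ["0 3 videos/example_a.avi", "", "1 5 videos/example b.avi"]
source_records = parse_list_lines(sample_lines)
target_records = parse_list_lines(sample_lines, keep_labels=False)
print(source_records[0], target_records[0])
```

In the U-14->H-7 setting, the lines of “ucf101_train_uh.txt” would be read with `keep_labels=True` and those of “hmdb51_train_uh.txt” with `keep_labels=False`, for example by passing an open file object as `lines`.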