Adversarial Correlation Adaptation Network (ACAN)

A Novel Method and A New Benchmark Dataset for Video-based Unsupervised Domain Adaptation (VUDA)


Abstract

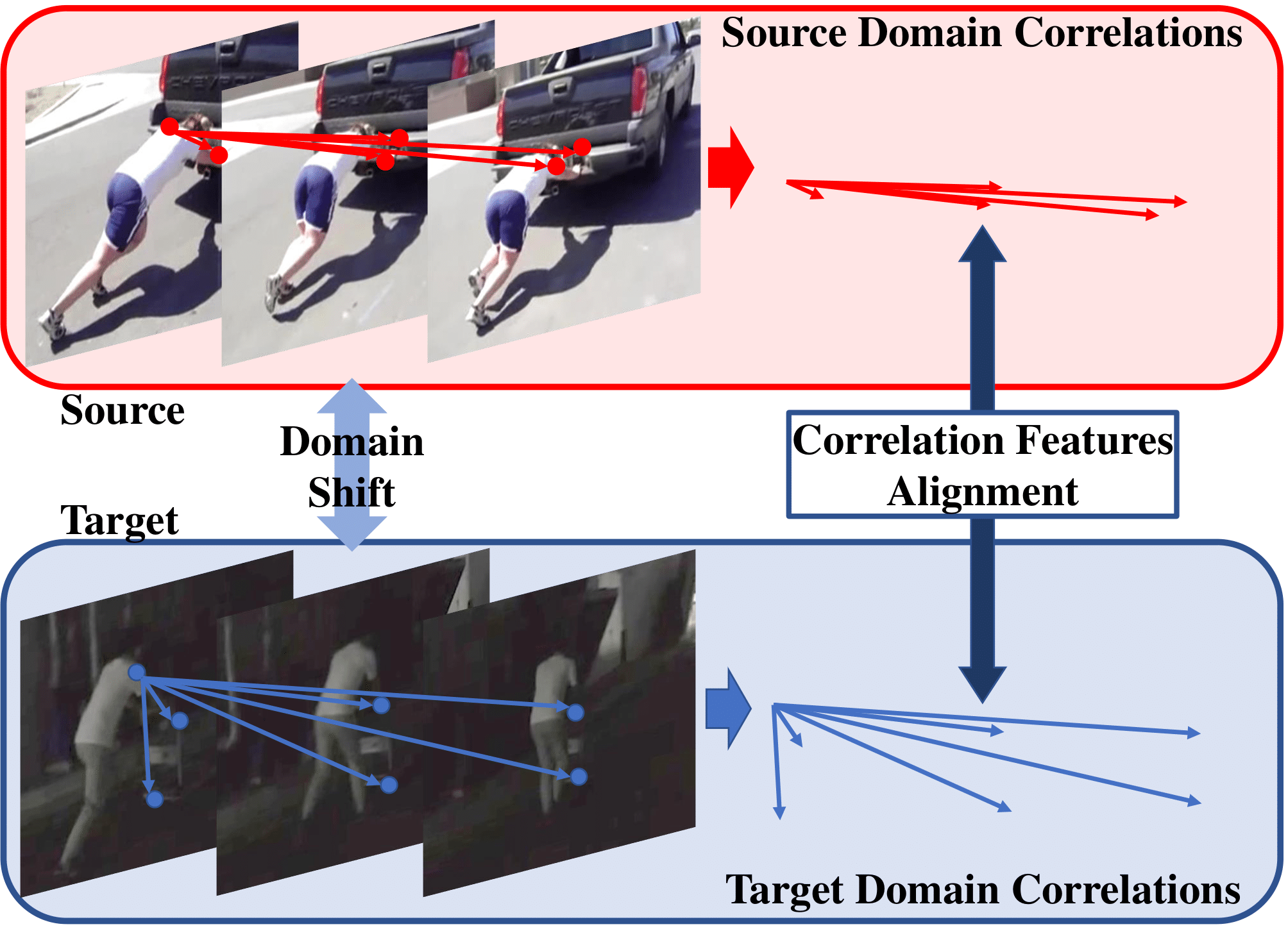

Domain adaptation (DA) approaches address domain shift and enable networks to be applied to different scenarios. Although various image DA approaches have been proposed in recent years, research on Video-based Unsupervised Domain Adaptation (VUDA) remains limited. This is partly due to the complexity of adapting the different modalities of features in videos, which include correlation features extracted as long-term dependencies of pixels across spatiotemporal dimensions. Correlation features are highly associated with action classes and have proven effective for accurate video feature extraction in the supervised action recognition task. Yet the correlation features of the same action differ across domains due to domain shift. We therefore propose a novel Adversarial Correlation Adaptation Network (ACAN) that aligns action videos by aligning pixel correlations. ACAN aims to minimize the discrepancy between the source and target distributions of correlation information, termed the Pixel Correlation Discrepancy (PCD). VUDA research is also limited by the lack of cross-domain video datasets with larger domain shifts. We therefore introduce a novel HMDB-ARID dataset, whose larger domain shift stems from a larger statistical difference between the domains. The dataset is built to leverage current datasets for dark video classification. Empirical results demonstrate the state-of-the-art performance of our proposed ACAN on both existing VUDA datasets and the new HMDB-ARID dataset.

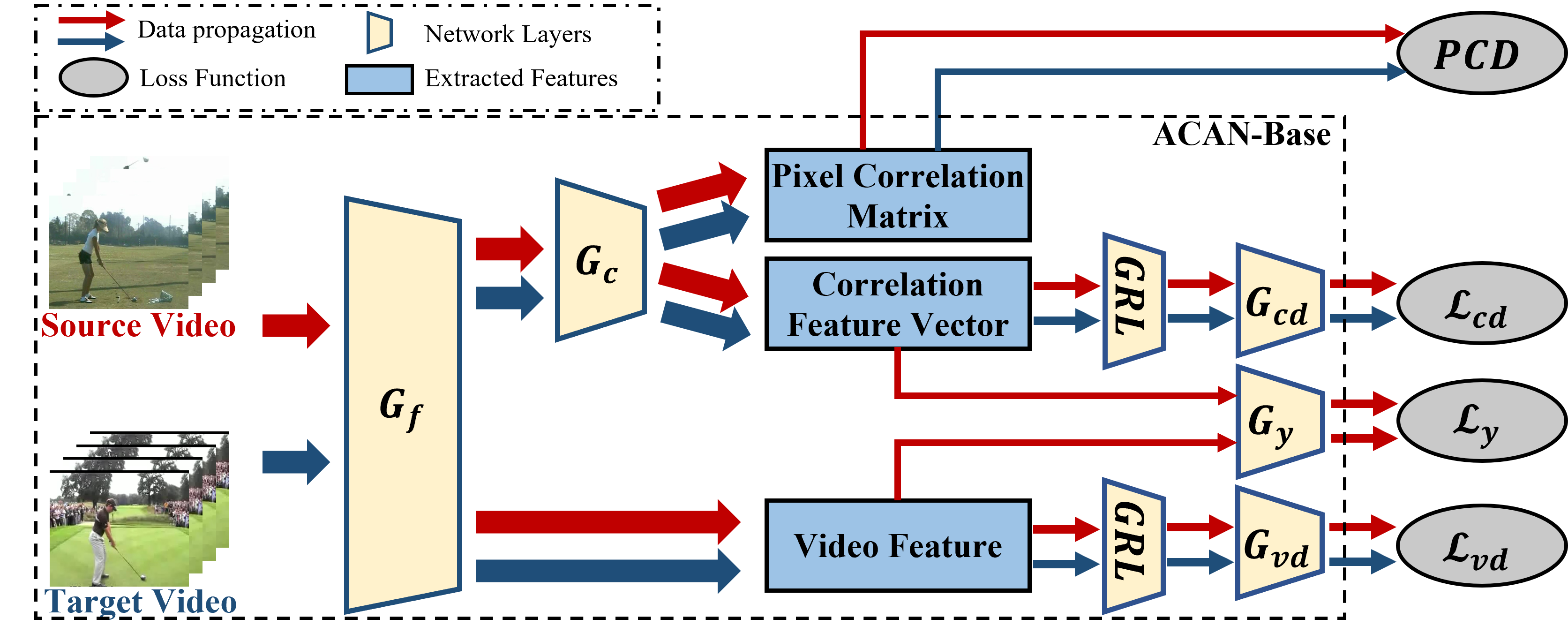
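The idea of measuring how far apart the source and target correlation distributions are can be illustrated with a simple stand-in. The sketch below is not the PCD defined in the paper: it assumes each video is summarized by a correlation matrix and measures the domain gap as a linear-kernel Maximum Mean Discrepancy, i.e. the squared distance between the two domains' mean (flattened) features. The function name `linear_mmd` and the synthetic data are hypothetical.

```python
import numpy as np


def linear_mmd(source_feats: np.ndarray, target_feats: np.ndarray) -> float:
    """Biased linear-kernel MMD between two sets of features.

    Stand-in only: this is NOT the PCD defined for ACAN.
    Each sample (e.g. an N x N correlation matrix) is flattened to a vector,
    and the result is the squared distance between the two domain means.
    """
    s = source_feats.reshape(source_feats.shape[0], -1)
    t = target_feats.reshape(target_feats.shape[0], -1)
    diff = s.mean(axis=0) - t.mean(axis=0)
    return float(diff @ diff)


# Synthetic "correlation matrices" (hypothetical data, for illustration only).
rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(64, 8, 8))
tgt_similar = rng.normal(0.0, 1.0, size=(64, 8, 8))
tgt_shifted = rng.normal(0.5, 1.0, size=(64, 8, 8))
print(linear_mmd(src, tgt_similar), linear_mmd(src, tgt_shifted))
```

With this construction the shifted target should give a clearly larger value than the similarly distributed one; an adaptation objective would try to drive such a discrepancy down.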

Structure of ACAN

The structure of ACAN is as follows:

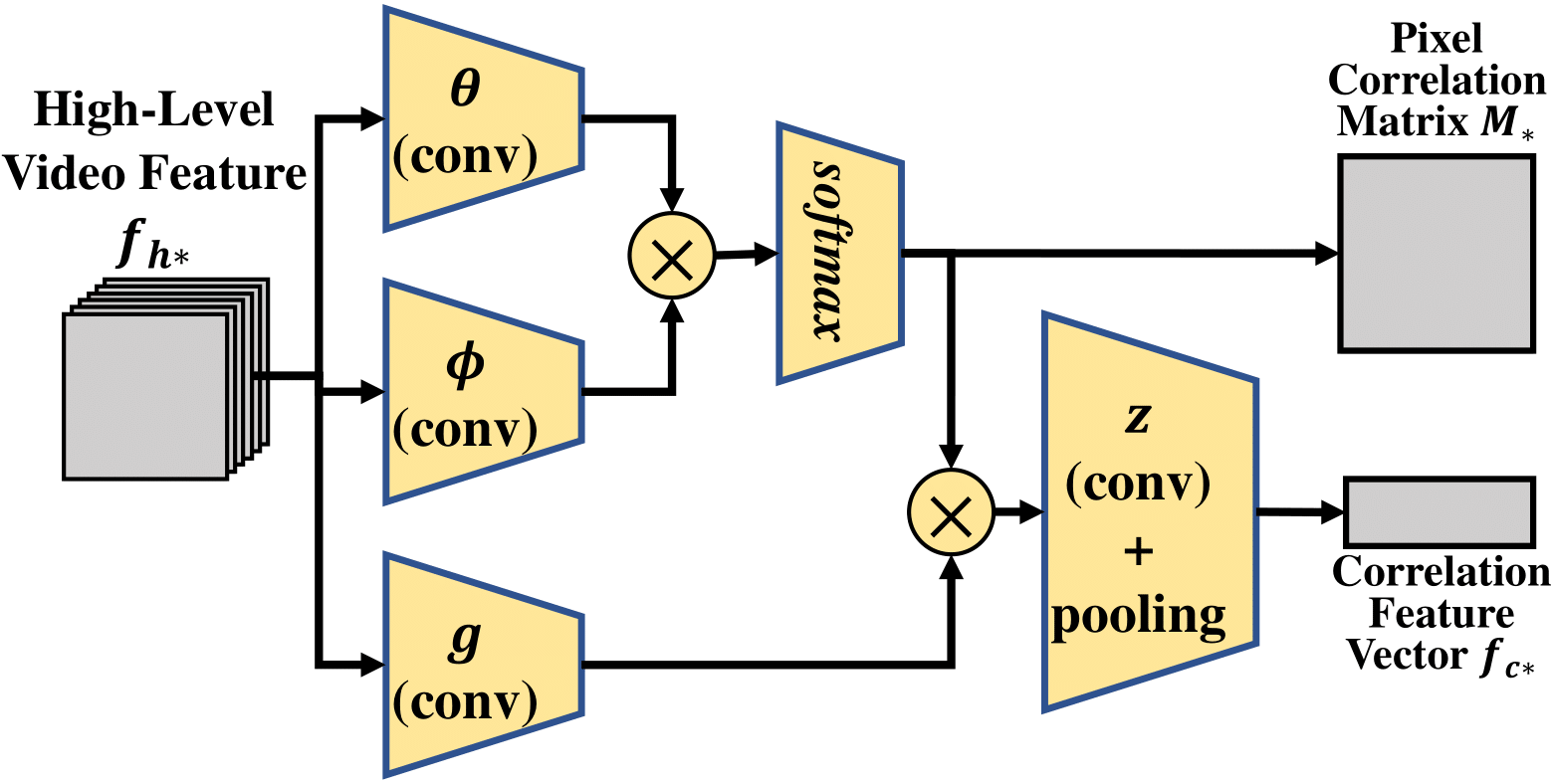
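Adversarial feature alignment in domain adaptation is commonly implemented with a gradient reversal layer (GRL) placed between the feature extractor and a domain discriminator. Whether ACAN uses exactly this layer and this weight schedule is an assumption here; the NumPy sketch below only illustrates the mechanism without an autograd framework, and the names `GradientReversal` and `grl_weight` are hypothetical. The schedule follows the common DANN choice 2 / (1 + exp(-γp)) - 1, where p is the fraction of training completed.

```python
import math

import numpy as np


class GradientReversal:
    """Gradient reversal layer (hypothetical NumPy version without autograd).

    Forward: identity. Backward: multiply the incoming gradient by -lam.
    """

    def __init__(self, lam: float = 1.0) -> None:
        self.lam = lam

    def forward(self, features: np.ndarray) -> np.ndarray:
        # Features reach the domain discriminator unchanged.
        return features

    def backward(self, grad_from_discriminator: np.ndarray) -> np.ndarray:
        # The feature extractor receives the negated, scaled gradient, which
        # pushes it towards features the discriminator cannot separate.
        return -self.lam * grad_from_discriminator


def grl_weight(progress: float, gamma: float = 10.0) -> float:
    """DANN-style schedule: 0 at the start of training, approaching 1 at the end.

    `progress` is the fraction of training completed, in [0, 1].
    """
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


grl = GradientReversal(lam=grl_weight(0.5))
feats = np.array([1.0, -2.0])
print(grl.forward(feats))
print(grl.backward(np.array([0.2, 0.4])))
```

In a framework with automatic differentiation, the same effect is usually obtained with a custom autograd function whose backward pass negates and scales the gradient.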

Correlation features are extracted with the following structure:

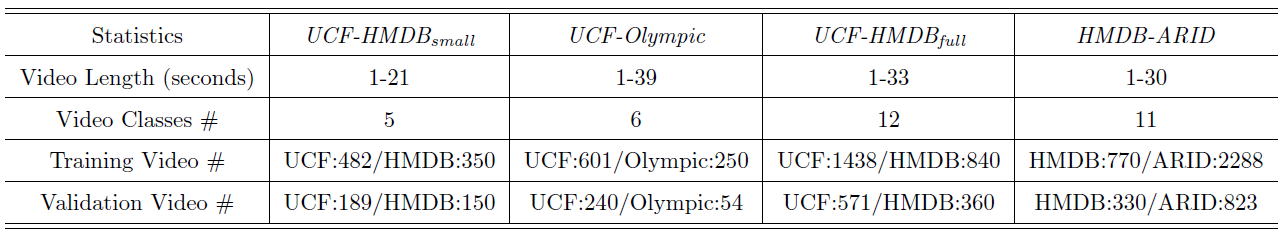
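As a rough illustration of pixel correlations as long-term dependencies across spatiotemporal dimensions, the sketch below computes a softmax-normalized, scaled dot-product affinity between every pair of positions in a C × T × H × W feature map, in the style of non-local blocks. This general form is an assumption, not the exact correlation module shown in the figure; `pixel_correlation` is a hypothetical name.

```python
import numpy as np


def pixel_correlation(video_feats: np.ndarray) -> np.ndarray:
    """Row-normalized affinity between all spatiotemporal positions.

    `video_feats` has shape (C, T, H, W); the result has shape (N, N) with
    N = T * H * W, and row i holds the softmax-normalized, scaled dot-product
    similarity of position i to every position (non-local style, assumed).
    """
    c = video_feats.shape[0]
    x = video_feats.reshape(c, -1).T              # (N, C): one vector per position
    logits = x @ x.T / np.sqrt(c)                  # (N, N) pairwise similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)   # each row sums to 1


feats = np.random.default_rng(0).standard_normal((16, 4, 7, 7))
corr = pixel_correlation(feats)
print(corr.shape)  # (196, 196)
```

Because every position is compared with every other position, the matrix grows quadratically with T·H·W.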

The HMDB-ARID dataset for VUDA

Cross-domain benchmark datasets for VUDA tasks are rather limited, which hinders VUDA research. More recently, larger cross-domain video datasets with larger domain discrepancies, such as UCF-HMDBfull, have been introduced. However, both domains in these datasets are still drawn from well-established action recognition datasets. Although these action recognition datasets contain different classes and different videos, most of them are collected from public video platforms. Their video statistics are therefore similar, which suggests that similar scenarios are likely shared across current action recognition datasets, so the domain shift between them may not be significant. Consequently, adapting a model across domains with similar video statistics or similar scenarios may be relatively easy. VUDA approaches that perform well on these cross-domain video datasets may therefore not transfer well to real-world applications, where the gap between domains can be much larger than in current cross-domain datasets. We argue that VUDA approaches would be more useful for bridging video domains with large distribution shifts, such as dark videos (adverse illumination) or hazy videos (adverse contrast).

We compare our HMDB-ARID dataset statistically with other commonly used VUDA datasets:

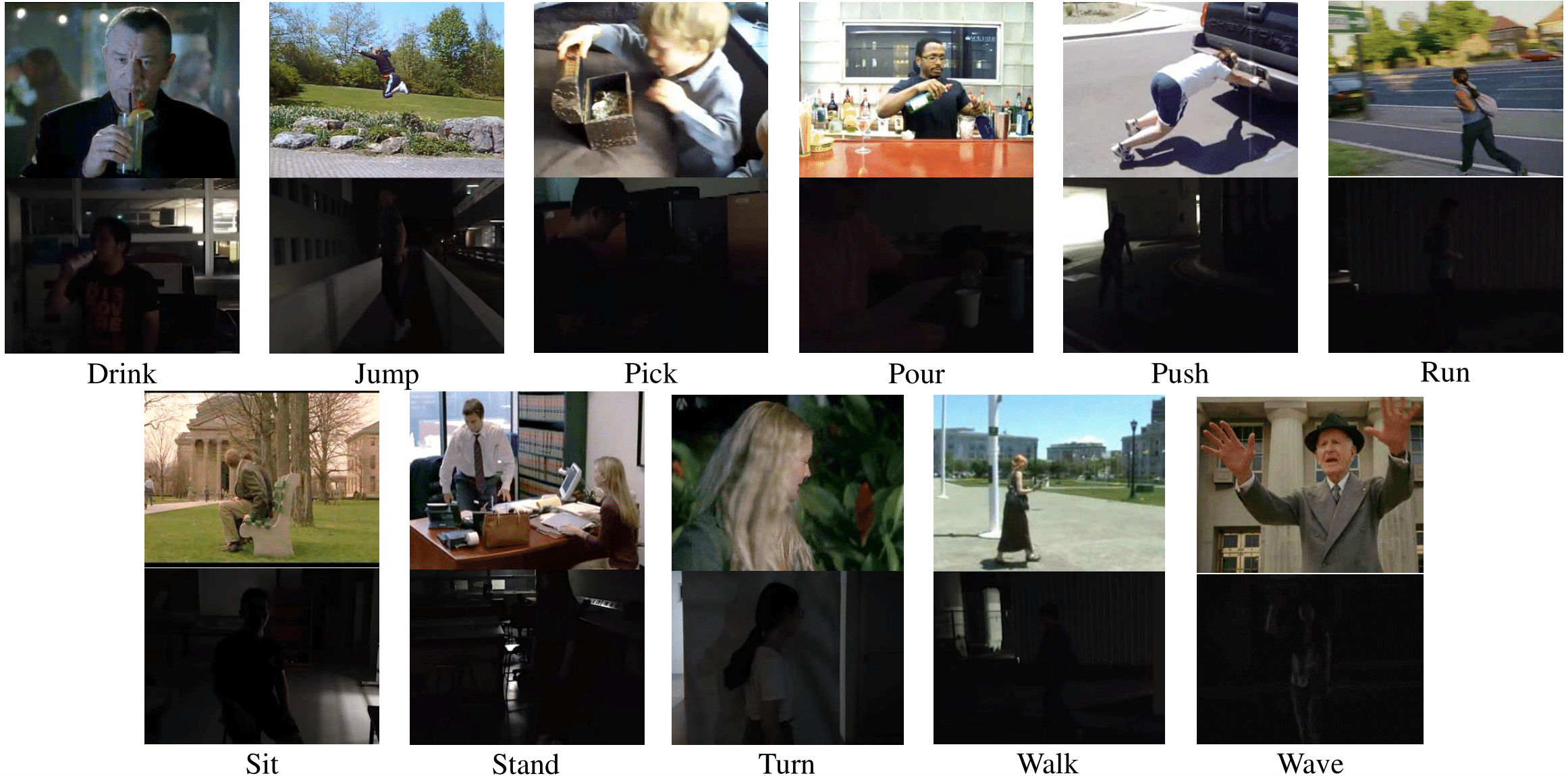
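To make the notion of a statistical difference between domains concrete, the sketch below computes the per-channel mean and standard deviation of pixel intensities for a clip. Which statistics the comparison above actually uses is not stated in this text, so these two are assumptions; the bright and dark inputs are synthetic random frames, not samples from HMDB51 or ARID, and `rgb_statistics` is a hypothetical name.

```python
from typing import Tuple

import numpy as np


def rgb_statistics(frames: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Per-channel mean and standard deviation of pixel intensities.

    `frames` has shape (num_frames, H, W, 3) with values in [0, 1].
    """
    pixels = frames.reshape(-1, frames.shape[-1])  # (num_pixels, 3)
    return pixels.mean(axis=0), pixels.std(axis=0)


# Synthetic frames only: a "normal" clip and a much darker, lower-contrast one.
rng = np.random.default_rng(0)
bright = rng.uniform(0.3, 0.9, size=(8, 32, 32, 3))
dark = rng.uniform(0.0, 0.1, size=(8, 32, 32, 3))
for name, clip in [("bright", bright), ("dark", dark)]:
    mean, std = rgb_statistics(clip)
    print(name, mean.round(3), std.round(3))
```

Both a lower mean (adverse illumination) and a lower standard deviation (reduced contrast) separate the dark clip from the bright one here.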

Sampled frames from HMDB-ARID:

Benchmark Results

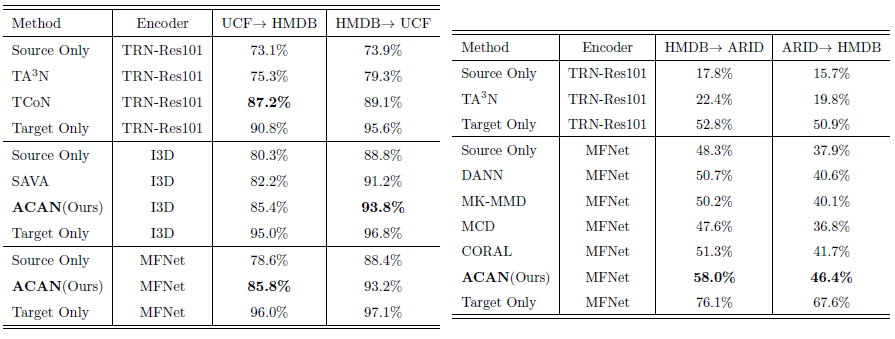

We evaluated our proposed ACAN on both UCF-HMDBfull and HMDB-ARID and compared it with previous domain adaptation methods. The results are as follows:

Papers and Download

- This paper has been accepted by IEEE Transactions on Neural Networks and Learning Systems (TNNLS)!

- To learn more about ACAN and the HMDB-ARID dataset, please click Here for our paper! The IEEE version is available Here!

- To obtain our HMDB-ARID dataset, you will need the full HMDB51 and ARID datasets. You may find the HMDB51 dataset Here and the ARID dataset Here (or its compact version Here). Alternatively, you may download a full copy of HMDB51 from Google Drive.

- The train/test split lists for HMDB-ARID can be downloaded Here. Each line of a list file contains the VideoID, ClassID, and VideoFile. For the HMDB->ARID setting, “hmdb51_train_da.txt” and “arid_train_da.txt” are the training lists and “arid_test_da.txt” is the test list; following the unsupervised domain adaptation setting, the labels in “arid_train_da.txt” are ignored. The roles of the two datasets are reversed for the ARID->HMDB setting.

- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg and Jianfei Yang at yang0478@e.ntu.edu.sg. Thank you!

- Usage of our dataset is licensed under the MIT License; you may view the license here.

- If you find our work helpful, you may cite these works below:

@article{xu2022aligning,
  title={Aligning correlation information for domain adaptation in action recognition},
  author={Xu, Yuecong and Cao, Haozhi and Mao, Kezhi and Chen, Zhenghua and Xie, Lihua and Yang, Jianfei},
  journal={IEEE Transactions on Neural Networks and Learning Systems},
  year={2022},
  publisher={IEEE}
}

@inproceedings{xu2021arid,
  title={{ARID}: A new dataset for recognizing action in the dark},
  author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Mao, Kezhi and Yin, Jianxiong and See, Simon},
  booktitle={Deep Learning for Human Activity Recognition: Second International Workshop, DL-HAR 2020, Held in Conjunction with IJCAI-PRICAI 2020, Kyoto, Japan, January 8, 2021, Proceedings 2},
  pages={70--84},
  year={2021},
  organization={Springer}
}

- This work is licensed under a Creative Commons Attribution 4.0 International License.

- This work is inspired by previous work on video-based domain adaptation. You may also consider citing their work:

@inproceedings{chen2019temporal,
  title={Temporal attentive alignment for large-scale video domain adaptation},
  author={Chen, Min-Hung and Kira, Zsolt and AlRegib, Ghassan and Yoo, Jaekwon and Chen, Ruxin and Zheng, Jian},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={6321--6330},
  year={2019}
}

- This work also relies on pioneering action recognition datasets. You may also consider citing these works:

@inproceedings{kuehne2011hmdb,
  title={HMDB: a large video database for human motion recognition},
  author={Kuehne, Hildegard and Jhuang, Hueihan and Garrote, Est{\'\i}baliz and Poggio, Tomaso and Serre, Thomas},
  booktitle={2011 International Conference on Computer Vision},
  pages={2556--2563},
  year={2011},
  organization={IEEE}
}
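The list-file format described in the download notes (VideoID, ClassID, VideoFile on each line) can be read with a short parser. The sketch below assumes whitespace-separated fields and an integer ClassID, which should be checked against the downloaded files; `parse_split`, `read_split` and `VideoEntry` are hypothetical names. Passing `keep_labels=False` drops the labels, mirroring the unsupervised setting in which the labels in “arid_train_da.txt” are ignored.

```python
from typing import Iterable, List, NamedTuple, Optional


class VideoEntry(NamedTuple):
    video_id: str
    class_id: Optional[int]  # None when the label is deliberately hidden
    video_file: str


def parse_split(lines: Iterable[str], keep_labels: bool = True) -> List[VideoEntry]:
    """Parse lines of the form 'VideoID ClassID VideoFile'.

    ASSUMPTION: fields are whitespace-separated and ClassID is an integer.
    maxsplit=2 keeps any spaces inside the file path.
    """
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        video_id, class_id, video_file = line.split(maxsplit=2)
        label = int(class_id) if keep_labels else None
        entries.append(VideoEntry(video_id, label, video_file))
    return entries


def read_split(path: str, keep_labels: bool = True) -> List[VideoEntry]:
    """Read a list file from disk (e.g. one of the downloaded *_da.txt files)."""
    with open(path, encoding="utf-8") as f:
        return parse_split(f, keep_labels)


print(parse_split(["0 3 brush_hair/a.avi", "1 7 run/b.avi"], keep_labels=False))
```

For the HMDB->ARID setting, this would amount to read_split("hmdb51_train_da.txt") for the labelled source, read_split("arid_train_da.txt", keep_labels=False) for the unlabelled target, and read_split("arid_test_da.txt") for evaluation.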