Attentive Temporal Consistent Network (SSA2lign)

A Primary Exploration on Few-Shot (Source) Video Domain Adaptation (FSVDA)

Paper and Data

Abstract

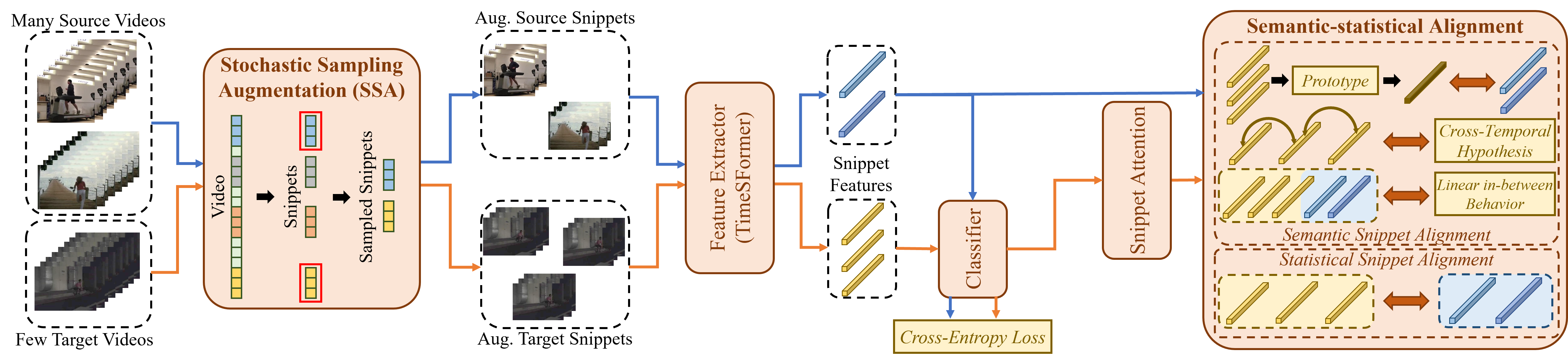

For video models to be transferred and applied seamlessly across video tasks in varied environments, Video Unsupervised Domain Adaptation (VUDA) has been introduced to improve the robustness and transferability of video models. However, current VUDA methods rely on a vast amount of high-quality unlabeled target data, which may not be available in real-world cases. We thus consider a more realistic Few-Shot Video-based Domain Adaptation (FSVDA) scenario where we adapt video models with only a few target video samples. While a few methods have touched upon Few-Shot Domain Adaptation (FSDA) in images and in FSVDA, they rely primarily on spatial augmentation for target domain expansion with alignment performed statistically at the instance level. However, videos contain more knowledge in terms of rich temporal and semantic information, which should be fully considered while augmenting target domains and performing alignment in FSVDA. We propose a novel SSA2lign to address FSVDA at the snippet level, where the target domain is expanded through a simple snippet-level augmentation followed by the attentive alignment of snippets both semantically and statistically, where semantic alignment of snippets is conducted through multiple perspectives. Empirical results demonstrate state-of-the-art performance of SSA2lign across multiple cross-domain action recognition benchmarks.

Structure of SSA2lign

The structure of SSA2lign is as follows:

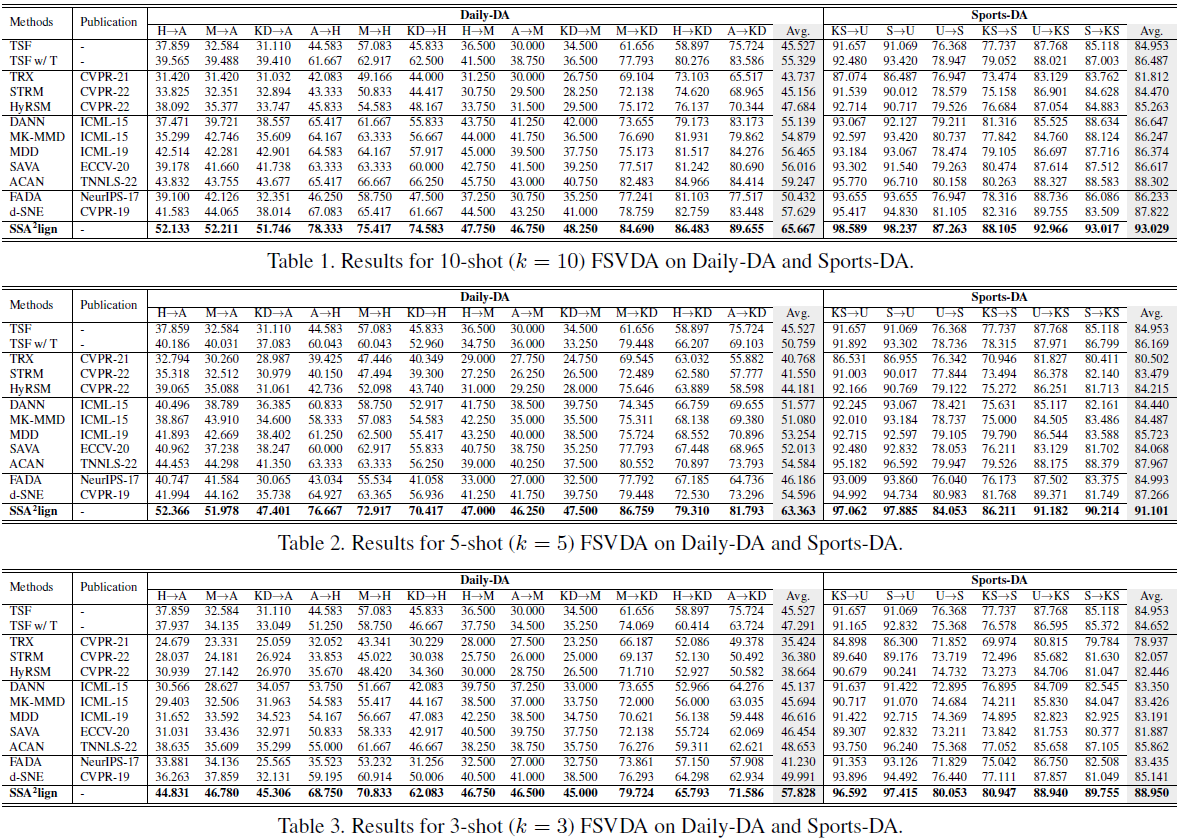
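As a rough illustration of the snippet-level idea described in the abstract, the sketch below draws several short snippets from one video and measures a simple statistical gap between source and target snippet features. It is a minimal sketch under stated assumptions, not the SSA2lign implementation: the function names (`sample_snippets`, `mean_feature`, `linear_mmd`) are hypothetical, random snippet sampling stands in for the snippet-level augmentation, and a linear-kernel mean discrepancy stands in for the statistical alignment. The attentive and semantic alignment parts are not reproduced here.

```python
import random


def sample_snippets(num_frames, snippet_len, num_snippets, rng):
    """Return `num_snippets` lists of consecutive frame indices.

    Assumption: a snippet is a short run of consecutive frames, and drawing
    several of them from one video is one simple way to expand a tiny
    target set.
    """
    if snippet_len > num_frames:
        raise ValueError("snippet_len must not exceed num_frames")
    last_start = num_frames - snippet_len
    starts = [rng.randint(0, last_start) for _ in range(num_snippets)]
    return [list(range(s, s + snippet_len)) for s in starts]


def mean_feature(features):
    """Element-wise mean of a list of equal-length feature vectors."""
    n = len(features)
    return [sum(column) / n for column in zip(*features)]


def linear_mmd(source_feats, target_feats):
    """Squared distance between the mean source and mean target features.

    Assumption: this linear-kernel discrepancy is only a placeholder for
    the statistical alignment objective; the paper may use a different one.
    """
    mu_s = mean_feature(source_feats)
    mu_t = mean_feature(target_feats)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


if __name__ == "__main__":
    rng = random.Random(0)
    # Four 8-frame snippets from a hypothetical 32-frame target video.
    snippets = sample_snippets(num_frames=32, snippet_len=8, num_snippets=4, rng=rng)
    print(snippets)

    # Toy 2-D snippet features; a real pipeline would use a video backbone.
    source = [[1.0, 0.0], [3.0, 0.0]]
    target = [[0.0, 0.0], [0.0, 0.0]]
    print(linear_mmd(source, target))
```

In a training loop, a quantity like `linear_mmd` would be minimized alongside the classification loss so that target snippet features move toward the source distribution. The choice of discrepancy here is illustrative only.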

Benchmark Results

We tested our proposed SSA2lign on various benchmarks, including Daily-DA and Sports-DA, and compared it with previous domain adaptation methods (including methods that require source data access). The results are as follows:

Paper, Code and Data

- NEW!! The code is now updated at This Repo!
- This paper is accepted for ICCV 2023!!! See you all in Paris!!!
- To learn more about our SSA2lign please click Here for our paper!
- To download the Daily-DA and Sports-DA datasets, click here for more information.
- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg, Jianfei Yang at yang0478@e.ntu.edu.sg and Zhenghua Chen at chen0832@e.ntu.edu.sg. Thank you!
- This work is licensed under a Creative Commons Attribution 4.0 International License.
- You may view the license here

- If you find our work helpful, you may cite the work below:

@article{xu2023augmenting,
  title={Augmenting and Aligning Snippets for Few-Shot Video Domain Adaptation},
  author={Xu, Yuecong and Yang, Jianfei and Zhou, Yunjiao and Chen, Zhenghua and Wu, Min and Li, Xiaoli},
  journal={arXiv preprint arXiv:2303.10451},
  year={2023}
}