Attentive Temporal Consistent Network (ATCoN)

A Primary Exploration on Source-Free Video Domain Adaptation (SFVDA)

Abstract

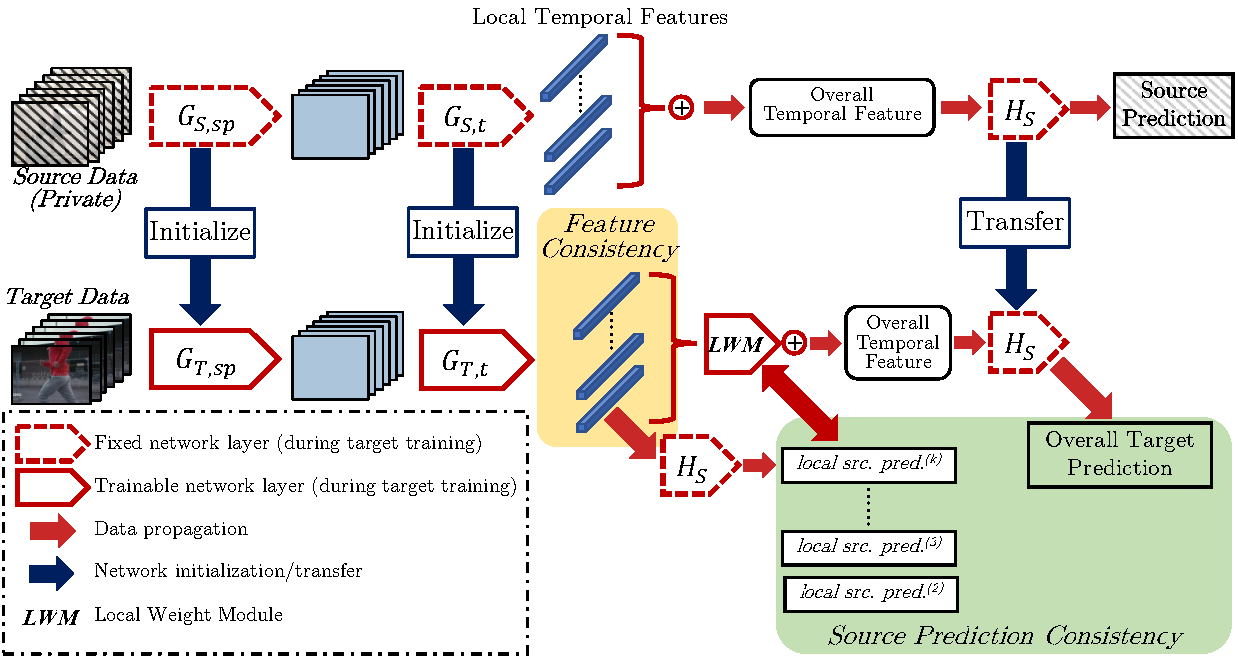

Video-based Unsupervised Domain Adaptation (VUDA) methods improve the robustness of video models, enabling them to be applied to action recognition tasks across different environments. However, these methods require constant access to source data during the adaptation process, even though in many real-world applications the subjects and scenes in the source video domain should be irrelevant to those in the target video domain. With the increasing emphasis on data privacy, methods that require source data access raise serious privacy concerns. To address this, a more practical domain adaptation scenario is formulated as Source-Free Video-based Domain Adaptation (SFVDA). Although a few methods exist for Source-Free Domain Adaptation (SFDA) on image data, their performance degrades in SFVDA because of the multi-modality nature of videos, which carry additional temporal features. In this paper, we propose a novel Attentive Temporal Consistent Network (ATCoN) to address SFVDA by learning temporal consistency. Temporal consistency is enforced by two novel consistency objectives, namely feature consistency and source prediction consistency, both performed across local temporal features. ATCoN further constructs effective overall temporal features by attending to local temporal features based on prediction confidence. Empirical results demonstrate the state-of-the-art performance of ATCoN across various cross-domain action recognition benchmarks.
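The confidence-based attention described in the abstract can be illustrated with a small sketch. This is not the released ATCoN code: the function names (`softmax`, `confidence`, `attend`) are hypothetical, and the choice of "one minus normalized entropy" as the confidence score is an assumption made here for illustration. The paper's exact weighting may differ.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence(probs):
    """Assumed confidence score: 1 minus normalized entropy.

    Close to 1.0 for a near one-hot prediction, 0.0 for a uniform one.
    """
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    # Clamp to guard against tiny negative values from rounding.
    return max(0.0, 1.0 - entropy / math.log(len(probs)))


def attend(local_features, local_logits):
    """Build an overall feature as a confidence-weighted sum of local features.

    local_features: list of feature vectors, one per local temporal feature.
    local_logits:   list of class-score vectors predicted from each feature.
    Returns (overall_feature, attention_weights).
    """
    scores = [confidence(softmax(z)) for z in local_logits]
    total = sum(scores)
    if total == 0.0:
        # No prediction is confident at all: fall back to a plain average.
        weights = [1.0 / len(scores)] * len(scores)
    else:
        weights = [s / total for s in scores]
    dim = len(local_features[0])
    overall = [
        sum(w * f[d] for w, f in zip(weights, local_features))
        for d in range(dim)
    ]
    return overall, weights
```

Calling `attend([[1.0, 0.0], [0.0, 1.0]], [[5.0, 0.0, 0.0], [0.0, 0.0, 0.0]])` puts almost all of the weight on the first local feature, because its prediction is sharply peaked while the second prediction is uniform.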

Structure of ATCoN

The structure of ATCoN is as follows:

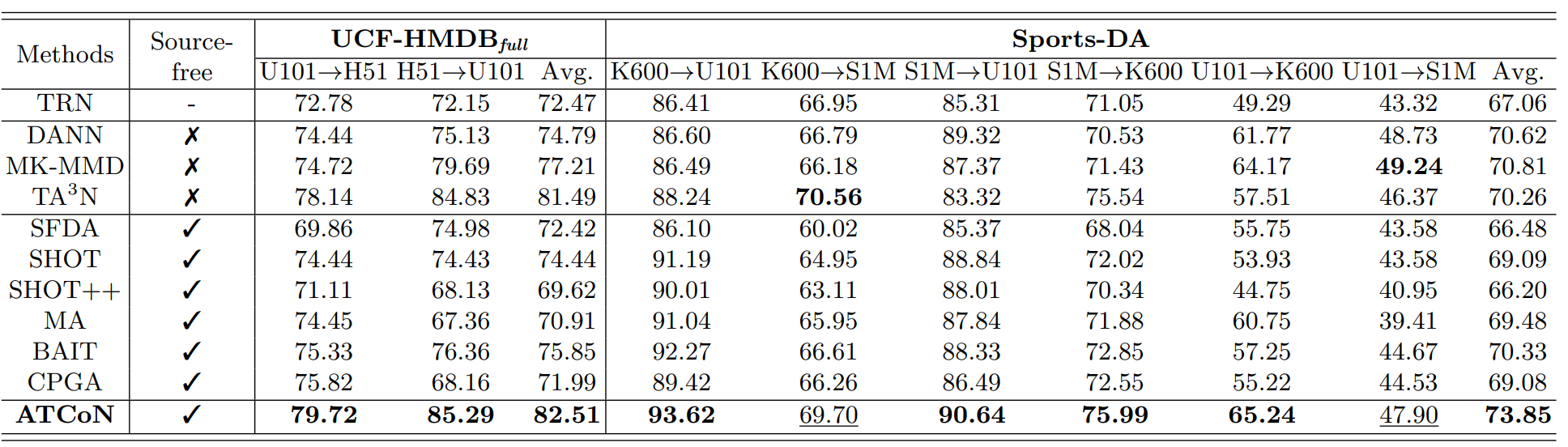

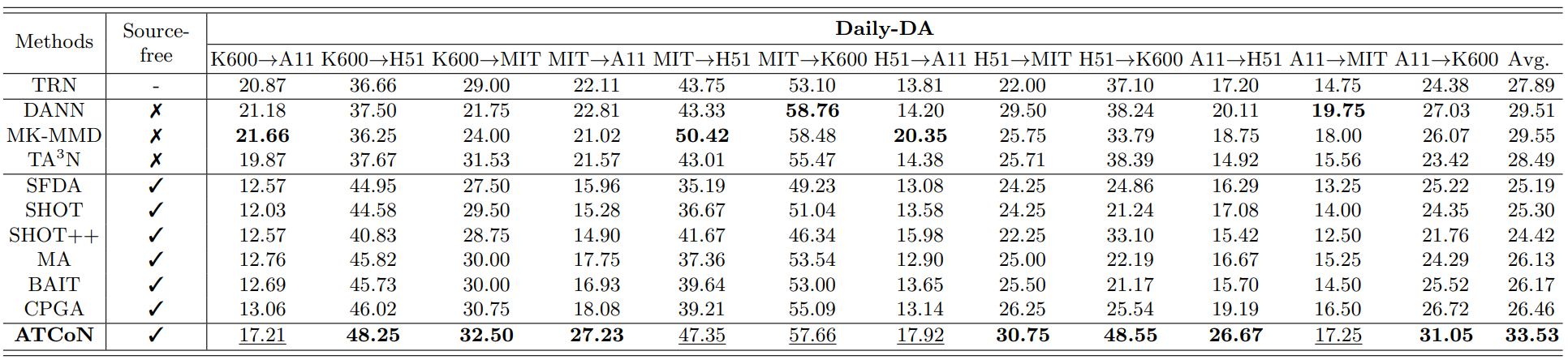
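The two consistency objectives named in the abstract, feature consistency and source prediction consistency across local temporal features, can be pictured with a toy sketch. The distance measures used here (mean pairwise squared distance for features, mean pairwise symmetric KL divergence for predictions) are stand-ins chosen for illustration and are not taken from the paper. The function names are hypothetical as well.

```python
import math
from itertools import combinations


def feature_consistency(local_features):
    """Mean pairwise squared distance between local temporal features.

    Stand-in measure (an assumption): 0.0 when all features are identical.
    """
    pairs = list(combinations(local_features, 2))
    if not pairs:
        raise ValueError("need at least two local temporal features")
    total = sum(
        sum((a - b) ** 2 for a, b in zip(u, v)) for u, v in pairs
    )
    return total / len(pairs)


def prediction_consistency(local_probs, eps=1e-12):
    """Mean pairwise symmetric KL divergence between class predictions.

    Stand-in measure (an assumption): 0.0 when all local temporal
    features lead to the same predicted distribution.
    """
    def kl(p, q):
        return sum(
            pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q)
        )

    pairs = list(combinations(local_probs, 2))
    if not pairs:
        raise ValueError("need at least two predictions")
    total = sum(0.5 * (kl(p, q) + kl(q, p)) for p, q in pairs)
    return total / len(pairs)
```

Both values shrink toward zero as the local temporal features, and the predictions made from them, agree with each other, which is the intuition behind learning temporal consistency.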

Benchmark Results

We tested ATCoN on several benchmarks, including UCF-HMDBfull, Daily-DA and Sports-DA, and compared it with previous domain adaptation methods (including methods that require source data access). The results are as follows:

Paper and Data

- This paper has been accepted by ECCV 2022!

- To learn more about ATCoN, please click Here for our paper. Click Here for our open-source code.

- To download the Daily-DA and Sports-DA datasets, click here for more information.

- To download the UCF-HMDBfull dataset, click here for more information.

- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg, Jianfei Yang at yang0478@e.ntu.edu.sg and Zhenghua Chen at chen0832@e.ntu.edu.sg. Thank you!

- This work is licensed under a Creative Commons Attribution 4.0 International License.

- You may view the license here.

- If you find our work helpful, you may cite the work below:

@inproceedings{xu2022source,
  title={Source-free video domain adaptation by learning temporal consistency for action recognition},
  author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Wu, Keyu and Wu, Min and Chen, Zhenghua},
  booktitle={European Conference on Computer Vision},
  pages={147--164},
  year={2022},
  organization={Springer}
}