Action Recognition in the Dark Dataset (ARID Dataset)


A Benchmark Dataset for Action Recognition in Dark Videos

Updates (ARID v1.5)

ARID has been honored to serve as the benchmark dataset of the UG2+ Challenge 2021/2022, held in conjunction with CVPR 2021/2022. Over this period, we have expanded ARID with more scenes and action clips to better facilitate action recognition in low illumination through both supervised and semi-supervised methods. ARID v1.5 is now released. It contains 5,572 clips covering a total of 11 actions, with more than 320 clips per action. These clips were shot in 24 scenes (12 indoor, 12 outdoor) with more than 15 volunteers. ARID v1.5 is also part of the Daily-DA dataset. The ARID v1 dataset is still available for download!

Abstract (ARID v1)

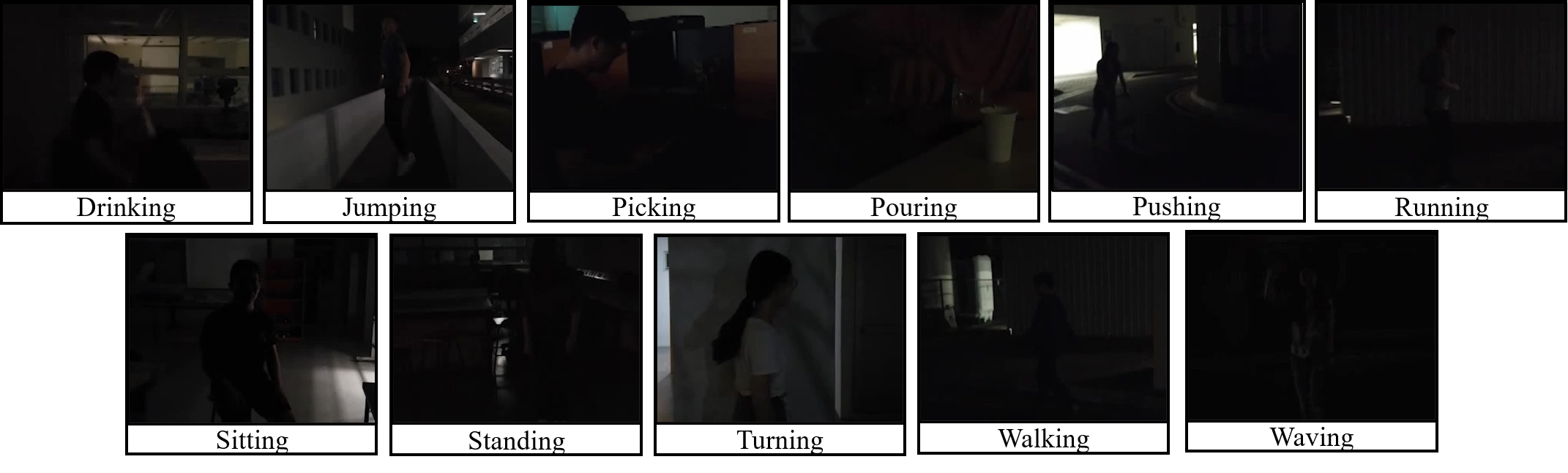

The task of action recognition in dark videos is useful in various scenarios, e.g., night surveillance and self-driving at night. Though progress has been made in action recognition for videos under normal illumination, few studies have addressed action recognition in the dark. This is partly due to the lack of sufficient datasets for such a task. In this paper, we explore the task of action recognition in dark videos. We bridge the data gap for this task by collecting a new dataset: the Action Recognition in the Dark (ARID) dataset. It consists of over 3,780 video clips across 11 action categories. To the best of our knowledge, it is the first dataset focused on human actions in dark videos. To gain further understanding of the ARID dataset, we analyze it in detail and show its necessity over synthetic dark videos. Additionally, we benchmark the performance of several current action recognition models on our dataset and explore potential methods for improving their performance. Our results show that current action recognition models and frame enhancement methods may not be effective solutions for the task of action recognition in dark videos.
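To make "frame enhancement" concrete, the sketch below applies gamma intensity correction, one common way to brighten dark frames before they reach a recognition model. It is a minimal illustration, not the enhancement pipeline used in the paper. It assumes a clip stored as a NumPy `uint8` array of shape (T, H, W, C). The function names `gamma_correct` and `enhance_clip` and the default gamma of 2.0 are hypothetical choices.

```python
import numpy as np


def gamma_correct(frame: np.ndarray, gamma: float) -> np.ndarray:
    """Apply gamma intensity correction to a uint8 image or clip.

    out = 255 * (in / 255) ** (1 / gamma); gamma > 1 brightens dark pixels.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Precompute a 256-entry lookup table so each pixel is a single index.
    levels = np.arange(256, dtype=np.float64) / 255.0
    lut = np.clip(np.round(255.0 * levels ** (1.0 / gamma)), 0, 255).astype(np.uint8)
    # Fancy indexing maps every uint8 value through the table, keeping the shape.
    return lut[frame]


def enhance_clip(frames: np.ndarray, gamma: float = 2.0) -> np.ndarray:
    """Apply the same correction to every frame of a (T, H, W, C) clip."""
    if frames.dtype != np.uint8:
        raise TypeError("expected a uint8 clip")
    return gamma_correct(frames, gamma)


if __name__ == "__main__":
    # A synthetic "dark" clip: 8 frames of 112x112 RGB with low intensities.
    rng = np.random.default_rng(0)
    clip = rng.integers(0, 40, size=(8, 112, 112, 3), dtype=np.uint8)
    bright = enhance_clip(clip)
    print(clip.mean(), bright.mean())
```

A lookup table is used because a `uint8` frame has only 256 possible values, so the power function is evaluated 256 times instead of once per pixel.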

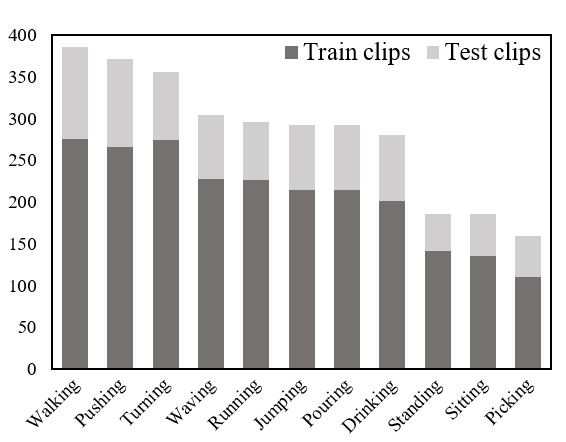

Basic Statistics

The distribution of clips among the 11 classes is as follows:

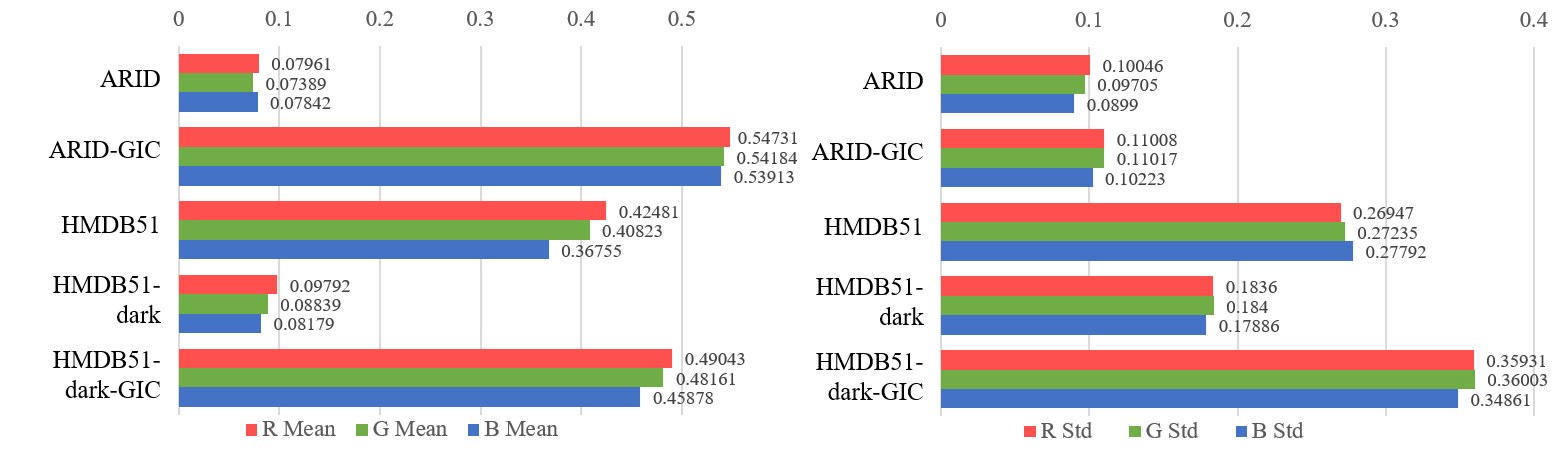

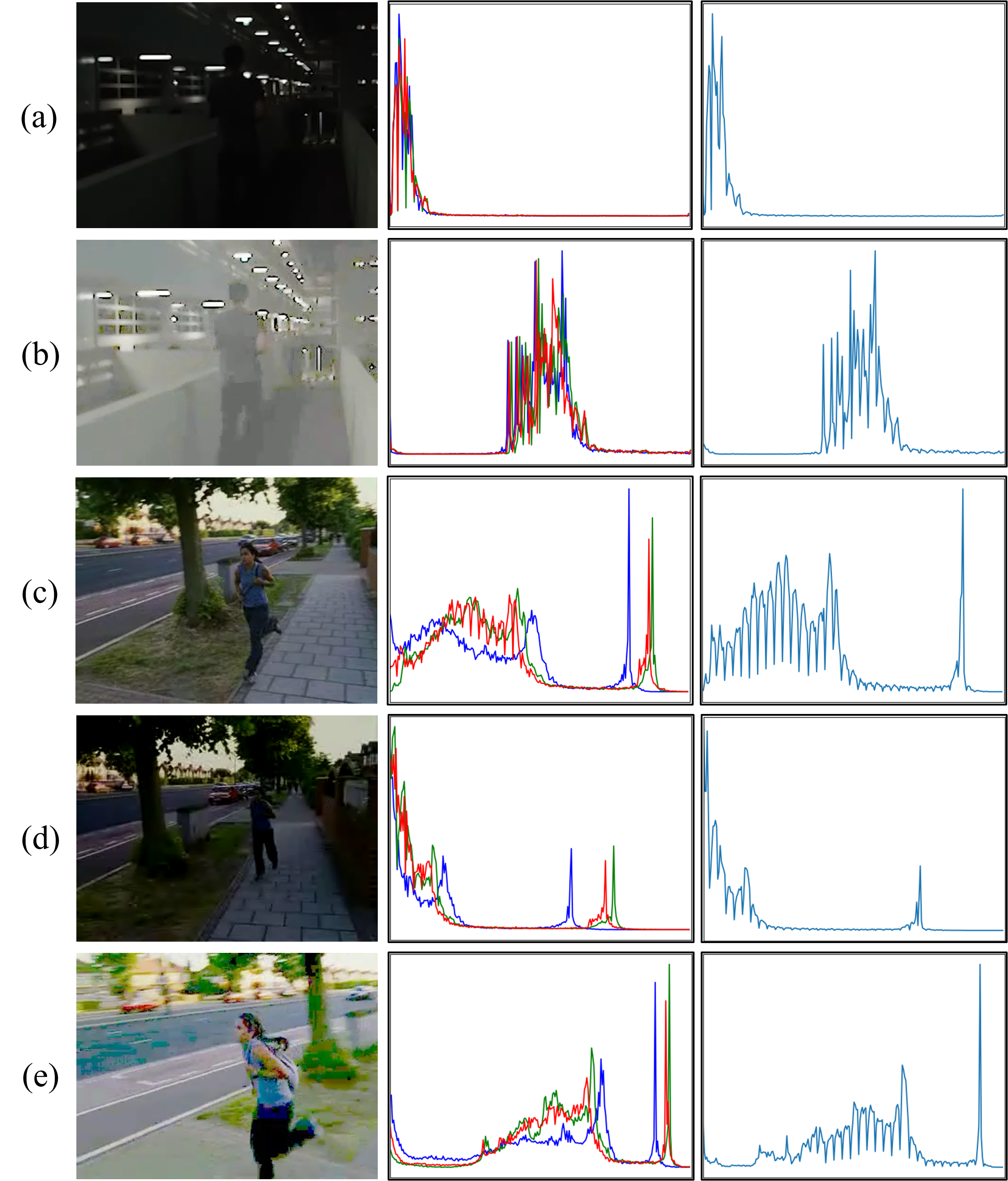
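Per-class statistics like those above can be recomputed from any list of (clip path, class label) pairs. The sketch below assumes such a list is already in memory. It does not parse ARID's actual annotation files, whose format may differ, and the file names in the example are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def class_distribution(samples: Iterable[Tuple[str, str]]) -> Counter:
    """Count clips per class label from (clip_path, label) pairs."""
    return Counter(label for _, label in samples)


def summarize(counts: Counter) -> Dict[str, object]:
    """Summarize a per-class count table (total, extremes, fractions)."""
    total = sum(counts.values())
    return {
        "num_classes": len(counts),
        "total_clips": total,
        "min_per_class": min(counts.values()),
        "max_per_class": max(counts.values()),
        # Share of the dataset held by each class.
        "fraction": {c: n / total for c, n in counts.items()},
    }


if __name__ == "__main__":
    # Hypothetical annotation entries, for illustration only.
    samples = [("clip_0001.mp4", "Jump"), ("clip_0002.mp4", "Run"), ("clip_0003.mp4", "Jump")]
    print(summarize(class_distribution(samples)))
```

Checking `min_per_class` against a target (for example, the "more than 320 clips per action" stated for v1.5) is a quick sanity check after downloading.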

Comparisons with HMDB51(-dark)

We statistically compare our ARID dataset with HMDB51 and HMDB51-dark; the results and sampled frames are as follows:

Benchmark Results

Here we present benchmark results of several existing action recognition models (across three splits):

| Method | Top-1 Accuracy | Top-5 Accuracy |
|---|---|---|
| VGG-Two Stream | 32.08% | 90.76% |
| TSN | 57.96% | 94.17% |
| C3D | 40.34% | 94.17% |
| I3D-RGB | 54.68% | 97.69% |
| I3D-Two Stream | 72.78% | 99.39% |
| 3D-ResNet (50) | 71.08% | 99.39% |
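Top-1 and Top-5 accuracy count a clip as correct when its ground-truth class is the highest-scoring prediction, or is among the five highest-scoring predictions, respectively. The minimal NumPy sketch below assumes per-clip class scores in an (N, C) array and integer labels. It also assumes that "across three splits" means the mean of the per-split accuracies, which the table does not state explicitly. The function names are hypothetical.

```python
from typing import Sequence

import numpy as np


def top_k_accuracy(scores: np.ndarray, labels: np.ndarray, k: int = 1) -> float:
    """Fraction of samples whose true label is among the k highest scores.

    scores: (N, C) class scores; labels: (N,) integer class indices; 1 <= k <= C.
    """
    if not 1 <= k <= scores.shape[1]:
        raise ValueError("k must be between 1 and the number of classes")
    # The last k columns after argpartition hold the k largest scores per row
    # (their order among themselves does not matter for a hit test).
    top_k = np.argpartition(scores, -k, axis=1)[:, -k:]
    hits = (top_k == labels[:, None]).any(axis=1)
    return float(hits.mean())


def mean_over_splits(per_split: Sequence[float]) -> float:
    """Average a metric over evaluation splits (assumed aggregation)."""
    return float(np.mean(per_split))


if __name__ == "__main__":
    scores = np.array([[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]])
    labels = np.array([1, 1, 0])
    print(top_k_accuracy(scores, labels, k=1), top_k_accuracy(scores, labels, k=2))
```

With 11 classes, Top-5 covers nearly half the label space, which helps explain why Top-5 figures in the table are much closer together than Top-1.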

The 5th UG2+ Challenge Workshop

- I am co-organizing the 5th UG2+ Workshop with our ARID dataset, to be held in conjunction with CVPR 2022. For more information about this workshop, click here.

The 4th UG2+ Challenge Workshop

- I co-organized the 4th UG2+ Workshop with our ARID dataset, held in conjunction with CVPR 2021. For more information about this workshop, click here.
- Note that the data in this workshop is an expansion of the original dataset, denoted as ARID v1.1. ARID v1.1 will NOT be released alongside v1.5.
- A summary of this workshop is available in this paper!
- I would like to thank Wuyang Chen and Prof. Zhangyang Wang from UT Austin, Jianxiong Yin from the NVIDIA AI Tech Center, and Jianfei Yang and Haozhi Cao from NTU, Singapore, for their generous help during the challenge and workshop, and with the construction and expansion of ARID. Thank you!

Papers and Download

- To learn more about our ARID dataset, please click here for our paper!
- Our dataset version 1.5 (v1.5) can be downloaded here, with the annotations and data. The v1.5 version is used in the Daily-DA dataset.
- The original version (v1.0) can still be downloaded here, or as a compact version here, with the annotations and data. The v1.0 version is used in the HMDB-ARID dataset.
- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg and Jianfei Yang at yang0478@e.ntu.edu.sg. Thank you!
- Usage of our dataset is licensed under the CC BY 4.0 License; you may view the license here.


- If you find our work helpful, please cite it as:

  @inproceedings{xu2021arid, title={{ARID}: A new dataset for recognizing action in the dark}, author={Xu, Yuecong and Yang, Jianfei and Cao, Haozhi and Mao, Kezhi and Yin, Jianxiong and See, Simon}, booktitle={Deep Learning for Human Activity Recognition: Second International Workshop, DL-HAR 2020, Held in Conjunction with IJCAI-PRICAI 2020, Kyoto, Japan, January 8, 2021, Proceedings 2}, pages={70--84}, year={2021}, organization={Springer} }

- We provide sample code to get started; please check here.
- The pretrained models can be found here; please put them in the `./network/pretrained` folder.

- This work is licensed under a Creative Commons Attribution 4.0 International License.