Endo and eXo-TEmporal Regularized Network (EXTERN)

A Primary Exploration on Black-box Video Domain Adaptation (BVDA)


Abstract

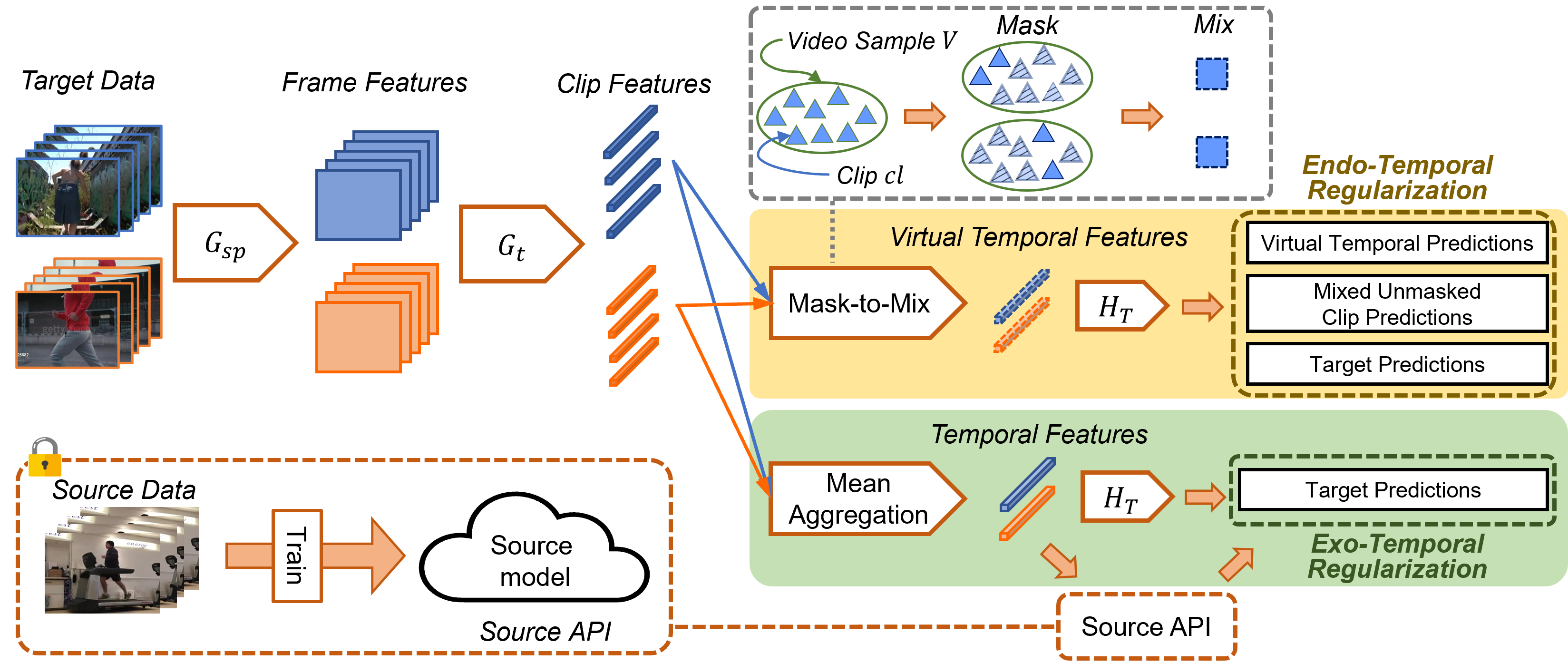

To enable video models to be applied seamlessly across video tasks in different environments, various Video Unsupervised Domain Adaptation (VUDA) methods have been proposed to improve the robustness and transferability of video models. Despite these improvements in model robustness, VUDA methods require access to both the source data and the source model parameters during adaptation, which raises serious data privacy and model portability concerns. To address these concerns, this paper is the first to formulate Black-box Video Domain Adaptation (BVDA), a more realistic yet more challenging scenario in which the source video model is provided only as a black-box predictor. Although a few Black-box Domain Adaptation (BDA) methods have been proposed for images, they cannot be applied directly to videos, because the video modality carries more complex temporal features that are harder to align. To address BVDA, we propose a novel Endo and eXo-TEmporal Regularized Network (EXTERN), which applies mask-to-mix strategies and two video-tailored regularizations, endo-temporal regularization and exo-temporal regularization, across both clip and temporal features, while distilling knowledge from the predictions of the black-box predictor. Empirical results demonstrate that EXTERN achieves state-of-the-art performance across various cross-domain closed-set and partial-set action recognition benchmarks, even surpassing most existing video domain adaptation methods that have access to the source data.
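The black-box constraint can be illustrated with a minimal sketch, assuming the source model is exposed only as a function that returns class probabilities for a video (no parameters, no gradients). The target model is trained to match those probabilities through a KL-divergence distillation loss, and a generic clip-consistency term stands in for a temporal regularizer. All names here (`black_box`, `blackbox_distillation_loss`, `clip_consistency_loss`) are hypothetical, and this is not EXTERN's actual mask-to-mix strategy or its endo-/exo-temporal regularization, which are defined in the paper.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete distributions; eps guards against log(0)."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def blackbox_distillation_loss(
    black_box: Callable[[object], Vector],
    video: object,
    student_logits: Sequence[float],
) -> float:
    """Hypothetical distillation loss: the black-box source predictor can only be
    queried for class probabilities, which serve as soft targets for the target model."""
    teacher_probs = black_box(video)
    student_probs = softmax(student_logits)
    return kl_divergence(teacher_probs, student_probs)


def clip_consistency_loss(clip_logits: Sequence[Sequence[float]]) -> float:
    """Hypothetical stand-in for a temporal regularizer (not EXTERN's formulation):
    pull each clip's prediction toward the mean prediction over clips of one video."""
    probs = [softmax(logits) for logits in clip_logits]
    n, k = len(probs), len(probs[0])
    mean = [sum(p[c] for p in probs) / n for c in range(k)]
    return sum(kl_divergence(mean, p) for p in probs) / n


if __name__ == "__main__":
    # A fake black-box predictor that always returns the same class probabilities.
    fake_black_box = lambda video: [0.7, 0.2, 0.1]
    print(blackbox_distillation_loss(fake_black_box, "video_0", [2.0, 0.5, -1.0]))
    print(clip_consistency_loss([[3.0, 0.0], [0.0, 3.0]]))
```

In a real setup these two terms would be summed (with some weighting) and minimized with respect to the target model's parameters only, since the source model is never differentiated through.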

Structure of EXTERN

The structure of EXTERN is as follows:

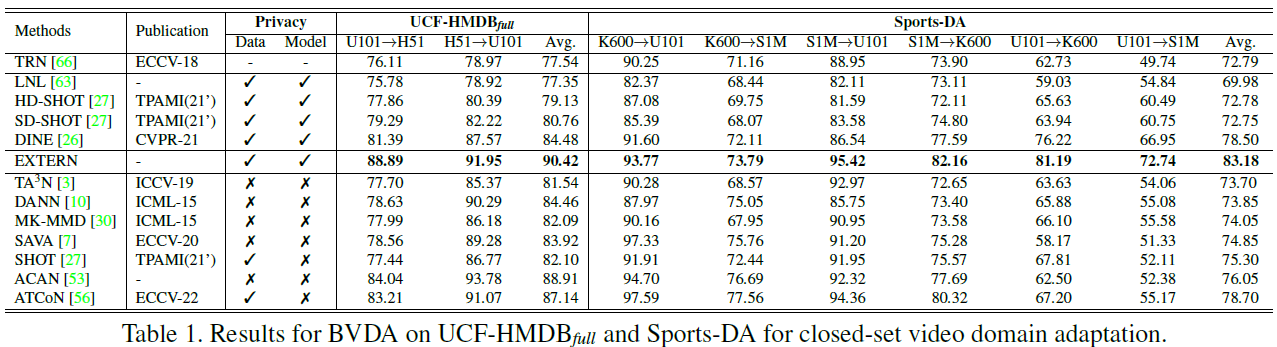

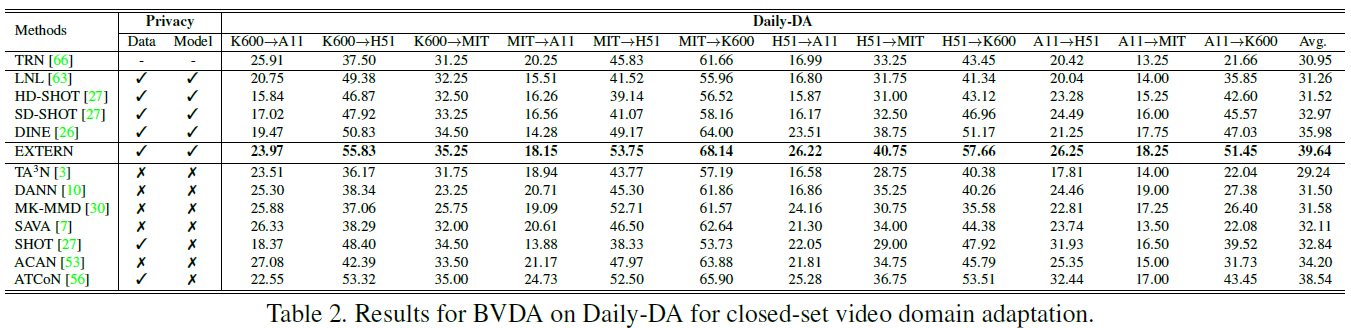

Benchmark Results (To Update)

We tested EXTERN on several benchmarks: the closed-set benchmarks UCF-HMDBfull, Daily-DA and Sports-DA, and the partial-set benchmarks UCF-HMDBpartial, MiniKinetics-UCF and HMDB-ARIDpartial. We compared it with previous domain adaptation methods, including methods that require access to the source data or to the source model. The results are as follows:

Paper and Data

- To learn more about EXTERN, please click here for our paper!
- To download the Daily-DA and Sports-DA datasets, click here for more information.
- To download the UCF-HMDBpartial, MiniKinetics-UCF and HMDB-ARIDpartial datasets, click here for more information.
- To download the UCF-HMDBfull dataset, click here for more information.
- For further enquiries, please write to Yuecong Xu at xuyu0014@e.ntu.edu.sg, Jianfei Yang at yang0478@e.ntu.edu.sg and Zhenghua Chen at chen0832@e.ntu.edu.sg. Thank you!
- This work is licensed under a Creative Commons Attribution 4.0 International License.
- You may view the license here.
- If you find our work helpful, please cite it as follows:

@article{xu2022extern,
  title={EXTERN: Leveraging endo-temporal regularization for black-box video domain adaptation},
  author={Xu, Yuecong and Yang, Jianfei and Wu, Min and Li, Xiaoli and Xie, Lihua and Chen, Zhenghua},
  journal={arXiv preprint arXiv:2208.05187},
  year={2022}
}